Commands Enable PromptQL to Modify Data

Introduction

Commands are a crucial component that enables PromptQL to take action on your data. While PromptQL can query your data through natural language conversations, commands let it go further by modifying data (inserts, updates, deletes), executing complex operations, or implementing custom business logic across your connected data sources.

When a user requests changes to their data through PromptQL, the system dynamically builds a query plan that incorporates these commands as part of its execution strategy, providing high accuracy and detailed explanations of each action taken.

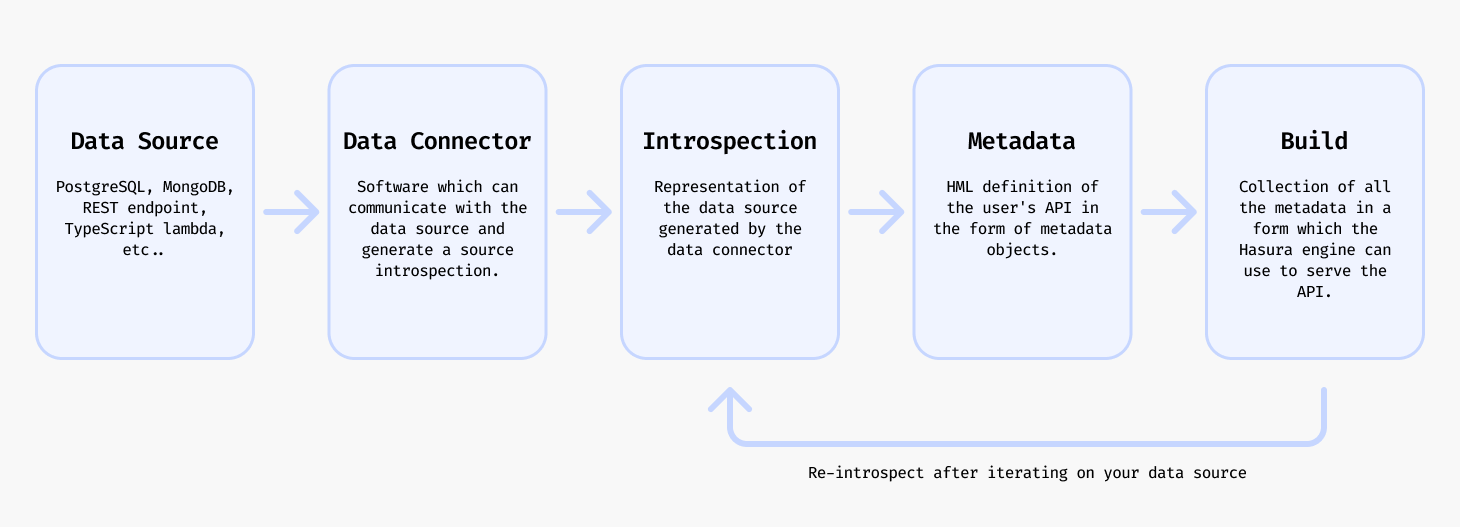

Lifecycle

When setting up commands for use with PromptQL, follow this lifecycle:

- Have some operation in your data source that you want to make executable via PromptQL.

- Introspect your data source using the DDN CLI with the relevant data connector to fetch the operation resources.

- Add the command to your metadata with the DDN CLI.

- Create a build of your supergraph API with the DDN CLI.

- Serve your build as your API with the Hasura engine either locally or in the cloud.

- Interact with your data through PromptQL, which can now execute these commands as part of its dynamic query planning.

Create a command

To add a command, you need a data connector that is already set up and connected to your data source. Follow the Quickstart or the tutorial in How to Build with DDN to get to that point.

From a source operation

Some data connectors support the ability to introspect your data source to discover the commands that can be added to your supergraph automatically.

ddn connector introspect <connector_name>

ddn connector show-resources <connector_name>

ddn command add <connector_link_name> <operation_name>

Alternatively, add all available commands at once by specifying "*".

ddn command add <connector_link_name> "*"

This will add commands with their accompanying metadata definitions to your metadata.

Via native operations

Some data connectors support the ability to add commands via native operations so that you can add any operation that is not supported by the automatic introspection process.

For classic database connectors, this will be native query code for that source. This can be, for example, a more complex read operation or a way to run custom business logic, which PromptQL can incorporate into its query plan.

For Lambda connectors (TypeScript, Python, Go, etc.), this will be a function (read-only) or a procedure (a mutation or other side effects) that PromptQL can use to execute specialized operations on your data.

- Node.js TypeScript

- Python

- Go

Node.js TypeScript

You can create custom TypeScript functions in your connector to expand PromptQL's capabilities, enabling it to interact with your data in more sophisticated ways through natural language conversations.

ddn connector init my_ts -i

/**
 * @readonly
 */
export function myCustomCode(myInput: string): string {
  // Do something with the input
  return "My output";
}

The @readonly tag indicates this function is a read-only operation. PromptQL will recognize this as a data retrieval function when building query plans. Without this tag, PromptQL will treat it as an action that can modify data.
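To make the distinction concrete, here is a minimal sketch that places a tagged function next to an untagged one. The names getStock, addStock, and stockBySku are hypothetical, and the in-memory Map is only an assumed stand-in for a real data source; the sketch illustrates the tagging convention described above and is not a recommended storage pattern.

```typescript
// Hypothetical in-memory store standing in for a real data source.
const stockBySku = new Map<string, number>([["widget", 3]]);

/**
 * Returns the current stock level for a SKU, or 0 if the SKU is unknown.
 *
 * @readonly
 */
export function getStock(sku: string): number {
  const current = stockBySku.get(sku);
  return current === undefined ? 0 : current;
}

/**
 * Adds `quantity` units to a SKU and returns the new stock level.
 * There is no @readonly tag here, so per the rule above this would be
 * treated as an action that can modify data.
 */
export function addStock(sku: string, quantity: number): number {
  const updated = getStock(sku) + quantity;
  stockBySku.set(sku, updated);
  return updated;
}
```

Under these assumptions, getStock would be planned as a data retrieval step, while addStock would be planned as a data-modifying action.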

ddn connector introspect my_ts

ddn command add my_ts myCustomCode

ddn supergraph build local

ddn run docker-start

ddn console --local

Once set up, you can interact with your custom function through PromptQL conversations.

By adding custom functions to your supergraph, you extend PromptQL's capabilities to talk to your data in ways specific to your business needs.

Python

You can create Python connectors with custom code to enhance PromptQL's ability to talk to all your data. These connectors become available in your supergraph, allowing PromptQL to include them in its dynamic query plans.

ddn connector init my_py -i

from hasura_ndc import start
from hasura_ndc.function_connector import FunctionConnector

connector = FunctionConnector()

@connector.register_query
def my_custom_code(my_input: str) -> str:
    # Do something with the input
    return "My output"

if __name__ == "__main__":
    start(connector)

When you add the @connector.register_query decorator, you're creating a function that PromptQL can access as part of its query planning. If you use @connector.register_mutation instead, the function will be available for data modification operations in PromptQL's query plans.

ddn connector introspect my_py

ddn command add my_py my_custom_code

ddn supergraph build local

ddn run docker-start

ddn console --local

query MyCustomCode {
  myCustomCode(myInput: "My input")
}

By extending your supergraph with custom Python connectors, you give PromptQL the ability to perform specialized operations that go beyond standard data access, enabling more powerful and flexible interactions with your data.

Go

You can run arbitrary code in your Go connector and expose it for PromptQL to access, enabling your AI agents to interact accurately with your custom business logic.

ddn connector init my_go -i

package functions

import (
	"context"

	"hasura-ndc.dev/ndc-go/types"
)

// InputArguments represents the input of the native operation.
type InputArguments struct {
	MyInput string `json:"myInput"`
}

// OutputResult represents the output of the native operation.
type OutputResult struct {
	MyOutput string `json:"myOutput"`
}

// ProcedureCustomCode is a native operation that can be called via PromptQL.
func ProcedureCustomCode(ctx context.Context, state *types.State, arguments *InputArguments) (*OutputResult, error) {
	// Do something with the input
	return &OutputResult{
		MyOutput: "My output",
	}, nil
}

Using the prefix Procedure ensures ProcedureCustomCode() is exposed as a mutation in your supergraph. Prefixing with Function identifies it as a function to be exposed as a query. Both will be accessible through PromptQL.

Both take typed input arguments and return typed results (here, a struct with a single string field), which the connector uses to generate the corresponding schema for your supergraph.

ddn connector introspect my_go

ddn command add my_go customCode

ddn supergraph build local

ddn run docker-start

ddn console --local

Once your connector and custom function are set up, you can use PromptQL to talk to your data using natural language.

Once set up, PromptQL will automatically consider these commands when generating query plans based on user requests, enabling accurate data modifications and complex operations through natural language interactions.

Update a command

When your underlying data source changes, you'll need to update the commands available to PromptQL to ensure continued accuracy in its operations:

ddn connector introspect <connector_name>

ddn command update <connector_link_name> <command_name>

The output explains which resources were added or updated in the command.

After updating, PromptQL will automatically incorporate these changes into its query planning, ensuring that user interactions with their data remain accurate and up-to-date.

You can also update the command by editing the command's metadata manually.

Delete a command

ddn command remove <command_name>

Along with the command itself, the associated metadata is also removed, and PromptQL will no longer include this command in its query planning.

Reference

You can learn more about commands in the metadata reference docs.