With Persistent Datasources

The content of this guide is identical to our quickstart. It's a quick and easy way to get up and running with a single data source while also incorporating custom business logic so that PromptQL can take action on a user's behalf.

You'll use a hosted PostgreSQL database pre-loaded with IMDb movie data. You'll be able to chat with the data to explore information about different movies. Then, you'll add custom business logic that lets PromptQL take action, such as renting a movie on a user's behalf.

Prerequisites

Install the DDN CLI

To use this guide, ensure you've installed or updated your CLI to at least v2.28.0.

On macOS and Linux, run the installer script in your terminal:

curl -L https://graphql-engine-cdn.hasura.io/ddn/cli/v4/get.sh | bash

Currently, the CLI does not support installation on ARM-based Linux systems.

On Windows:

- Download the latest DDN CLI installer for Windows.
- Run the DDN_CLI_Setup.exe installer file and follow the instructions. This will only take a minute.
- By default, the DDN CLI is installed under C:\Users\{Username}\AppData\Local\Programs\DDN_CLI.
- The DDN CLI is added to your %PATH% environment variable so that you can use the ddn command from your terminal.
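If you want to script the minimum-version requirement, note that versions compare numerically per component rather than as strings, so v2.9.0 is older than v2.28.0. The following is a minimal sketch in TypeScript; `parseVersion` and `meetsMinimumVersion` are hypothetical helpers (not part of the DDN CLI), and they assume a `vMAJOR.MINOR.PATCH` string and ignore pre-release suffixes.

```typescript
// Hypothetical helpers (not part of the DDN CLI) for checking a version
// string such as "v2.28.0" against a minimum. Pre-release suffixes are ignored.
function parseVersion(version: string): [number, number, number] {
  // Drop a leading "v" and anything after "-" or "+" (e.g. "-beta.1").
  const core = version.trim().replace(/^v/, "").split(/[-+]/)[0];
  const parts = core.split(".").map((p) => parseInt(p, 10));
  if (parts.length !== 3 || parts.some((n) => isNaN(n))) {
    throw new Error(`Unrecognized version string: ${version}`);
  }
  return [parts[0], parts[1], parts[2]];
}

function meetsMinimumVersion(installed: string, minimum: string): boolean {
  const a = parseVersion(installed);
  const b = parseVersion(minimum);
  // Compare major, then minor, then patch as numbers, so 2.9 < 2.28.
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] > b[i];
  }
  return true; // identical versions satisfy the minimum
}

console.log(meetsMinimumVersion("v2.28.0", "v2.28.0")); // true
console.log(meetsMinimumVersion("v2.9.3", "v2.28.0")); // false
```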

Install Docker

The Docker-based workflow helps you iterate and develop locally without deploying any changes to Hasura DDN, making the development experience faster and your feedback loops shorter. You'll need Docker Compose v2.20 or later.

Validate the installation

You can verify that the DDN CLI is installed correctly by running:

ddn doctor

Tutorial

Step 1. Authenticate your CLI

ddn auth login

This will launch a browser window prompting you to log in or sign up for Hasura Cloud. After you log in, the CLI will acknowledge your login, giving you access to Hasura Cloud resources.

Step 2. Scaffold out a new local project

ddn supergraph init imdb-promptflix --with-promptql && cd imdb-promptflix

Now that you're in this directory, you'll see your project scaffolded out for you. You can view the structure by either running ls in your terminal or opening the directory in your preferred editor.

Step 3. Initialize your PostgreSQL connector

ddn connector init imdb -i

From the dropdown, select hasura/postgres-promptql (you can type to filter the list). Then, enter the following connection URI to an existing PostgreSQL database of IMDb data:

jdbc:postgresql://35.236.11.122:5432/imdb?user=read_only_user&password=readonlyuser

For the JDBC_SCHEMAS environment variable, enter the following:

public
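The connection URI bundles the host, port, database name, and credentials (here, a read-only user) into a single string. To make that structure visible, here is a small TypeScript sketch that splits a `jdbc:postgresql://host:port/database?key=value` URI into its parts. `parseJdbcPostgresUri` is a hypothetical helper, not something the connector exposes, and it assumes the simple shape used above (an explicit port and no IPv6 host).

```typescript
// Illustrative only: splits a PostgreSQL JDBC URI into its parts.
// The connector performs its own parsing; this helper is hypothetical.
interface JdbcParts {
  host: string;
  port: number;
  database: string;
  params: Record<string, string>;
}

function parseJdbcPostgresUri(uri: string): JdbcParts {
  // Expected shape: jdbc:postgresql://<host>:<port>/<database>?<key>=<value>&...
  const match = /^jdbc:postgresql:\/\/([^:\/?]+):(\d+)\/([^?]+)(?:\?(.*))?$/.exec(uri);
  if (!match) throw new Error(`Not a jdbc:postgresql URI: ${uri}`);
  const params: Record<string, string> = {};
  // Split the query string into decoded key/value pairs.
  for (const pair of (match[4] ?? "").split("&")) {
    if (!pair) continue;
    const eq = pair.indexOf("=");
    const key = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : pair.slice(eq + 1);
    params[decodeURIComponent(key)] = decodeURIComponent(value);
  }
  return { host: match[1], port: parseInt(match[2], 10), database: match[3], params };
}

const parts = parseJdbcPostgresUri(
  "jdbc:postgresql://35.236.11.122:5432/imdb?user=read_only_user&password=readonlyuser",
);
console.log(parts.database); // imdb
```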

Step 4. Introspect the PostgreSQL database

ddn connector introspect imdb

After running this, you should see a representation of your database's schema in the app/connector/imdb/configuration.json file; you can view this using cat or open the file in your editor.

Step 5. Track your tables

Based on our sample database, a SQL schema will be generated. Let's track all the models to get started quickly.

ddn model add imdb "*"

Open the app/metadata directory. You'll find Hasura Metadata Language (HML) files for each table in your database.

In this case, there's only one: public_movies.hml.

The DDN CLI uses these HML files to represent PostgreSQL tables in your API as models.

Step 6. Create a new build

ddn supergraph build local

The build is stored as a set of JSON files in engine/build.

Step 7. Start your local services

ddn run docker-start

Your terminal will be taken over by logs for the different services.

Talk to your data

Step 1. Open the PromptQL Playground

ddn console --local

Step 2. Ask questions about your dataset

The console is a web app hosted at promptql.console.hasura.io that connects to your local PromptQL API and data sources. Your data is processed in the DDN PromptQL runtime but isn't persisted externally.

Head over to the console and ask a few questions about your data.

Hi, what are some questions you can answer?

PromptQL will respond with information about the dataset and make suggestions for your first query on the data!

Go ahead and ask a question! In the next steps, we'll take a deeper look at interacting with the data.

Act on your data

Step 1. Create a command

While it's great that we can ask questions about the dataset, what if the application could take action on our behalf, such as renting a movie for us? We can easily do this by adding custom business logic using one of our lambda connectors. In the example below, we'll use TypeScript.

ddn connector init promptflix -i

From the list of choices, select hasura/nodejs. As with the PostgreSQL connector, the CLI will scaffold out a set of configuration files, including a file called app/connector/promptflix/functions.ts. This is where we'll add our logic so the application can take action on our behalf. Add the following code to this file:

/**
 * This interface defines the structure of the response object returned by the
 * function that rents a movie. It includes a success status and a message.
 * The success status is a boolean indicating whether the operation was
 * successful or not.
 */
interface RentMovieResponse {
  success: boolean;
  message: string;
}

/**
 * PromptFlix - A movie rental service
 *
 * @param {string} seriesTitle - The title of the movie series to rent
 * @returns {RentMovieResponse} - The response object containing success status and message
 */
export function rentSingleMovieBySeriesTitle(seriesTitle: string): RentMovieResponse {
  // Simulated rental: log the request and always report success.
  console.log(`Renting movie series: ${seriesTitle}`);
  return {
    success: true,
    message: `Successfully rented the movie series: ${seriesTitle}`,
  };
}

This function simulates the action of renting a movie on behalf of a user. The JSDoc comments aid in providing context to the underlying LLM so that the application knows how to use the function.
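The response interface can also carry failures. As a hypothetical extension that this guide does not use, the variant below trims the title and returns `success: false` for an empty one instead of reporting a rental. `rentSingleMovieBySeriesTitleChecked` is an illustrative name; if you added it to functions.ts, re-running the introspect and `ddn command add promptflix "*"` commands would likely expose it as an additional command.

```typescript
// Hypothetical variant of the tutorial's function, shown for illustration only.
interface RentMovieResponse {
  success: boolean;
  message: string;
}

/**
 * PromptFlix - A movie rental service (variant with input validation)
 *
 * @param {string} seriesTitle - The title of the movie series to rent
 * @returns {RentMovieResponse} - The response object containing success status and message
 */
export function rentSingleMovieBySeriesTitleChecked(seriesTitle: string): RentMovieResponse {
  const title = seriesTitle.trim();
  // Report a failure instead of "renting" an empty title.
  if (title.length === 0) {
    return { success: false, message: "A movie series title is required." };
  }
  console.log(`Renting movie series: ${title}`);
  return { success: true, message: `Successfully rented the movie series: ${title}` };
}

console.log(rentSingleMovieBySeriesTitleChecked("   ").message); // A movie series title is required.
```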

ddn connector introspect promptflix

ddn command add promptflix "*"

Before we create a new build, let's add a description to the metadata for our command, located in app/metadata/rent_single_movie_by_series_title.hml:

---
kind: Command
version: v1
definition:
  name: rent_single_movie_by_series_title
  description: This function allows users to rent movies from PromptFlix and should be used for any request to rent a movie.
  outputType: rent_movie_response!
  arguments:
    - name: series_title
      type: String!
  source:
    dataConnectorName: promptflix
    dataConnectorCommand:
      procedure: rentSingleMovieBySeriesTitle
    argumentMapping:
      series_title: seriesTitle
  graphql:
    rootFieldName: rent_single_movie_by_series_title
    rootFieldKind: Mutation
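The `argumentMapping` entry connects the command's argument name exposed in your API (`series_title`) to the parameter name of the TypeScript function (`seriesTitle`). Conceptually, it is a rename applied to the arguments before they reach the connector. The sketch below only illustrates that idea; `applyArgumentMapping` is hypothetical and is not how the DDN engine is implemented.

```typescript
// Hypothetical illustration of what argumentMapping expresses; not DDN engine code.
// Keys are command argument names; values are connector parameter names.
const argumentMapping: Record<string, string> = {
  series_title: "seriesTitle",
};

function applyArgumentMapping(
  args: Record<string, unknown>,
  mapping: Record<string, string>,
): Record<string, unknown> {
  const mapped: Record<string, unknown> = {};
  for (const name of Object.keys(args)) {
    // Fall back to the original name when the mapping has no entry for it.
    const target = Object.prototype.hasOwnProperty.call(mapping, name) ? mapping[name] : name;
    mapped[target] = args[name];
  }
  return mapped;
}

// Arguments as the command receives them, renamed for rentSingleMovieBySeriesTitle.
const connectorArgs = applyArgumentMapping({ series_title: "Saving Private Ryan" }, argumentMapping);
console.log(JSON.stringify(connectorArgs)); // {"seriesTitle":"Saving Private Ryan"}
```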

Then, create a new build and restart your local services:

ddn supergraph build local

ddn run docker-start

Step 2. Approve an action

Head back to the console and ask PromptQL:

I really like Apollo 13; can you recommend a single movie with the same actors that is a historical story?

PromptQL will analyze the dataset by classifying movies and will likely suggest Saving Private Ryan. It will probably also ask if you'd like to rent it. Answer yes, and you'll then be prompted to either Deny or Approve the action.

If you click Approve, PromptQL will confirm that the rental was successful! 🍿
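The Deny/Approve prompt acts as a human-in-the-loop gate: the rental function only runs once you approve it. A hypothetical sketch of that control flow follows; `runWithApproval` and `Decision` are illustrative names, and this is not PromptQL's implementation.

```typescript
// Hypothetical sketch of an approve/deny gate; not PromptQL's implementation.
type Decision = "approve" | "deny";

interface RentMovieResponse {
  success: boolean;
  message: string;
}

function runWithApproval(
  action: () => RentMovieResponse,
  decide: () => Decision,
): RentMovieResponse {
  // Only execute the side-effecting action after explicit approval.
  if (decide() === "approve") {
    return action();
  }
  // Denied: the action never runs.
  return { success: false, message: "Action denied by the user." };
}

const result = runWithApproval(
  () => ({ success: true, message: "Successfully rented the movie series: Saving Private Ryan" }),
  () => "deny",
);
console.log(result.message); // Action denied by the user.
```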

Deploy and share your project

Step 1. Create a new cloud build

Until this point, we've been developing locally. However, we can easily create a cloud build of our project and then share it with others.

ddn supergraph build create

The CLI will respond with information about your new build.

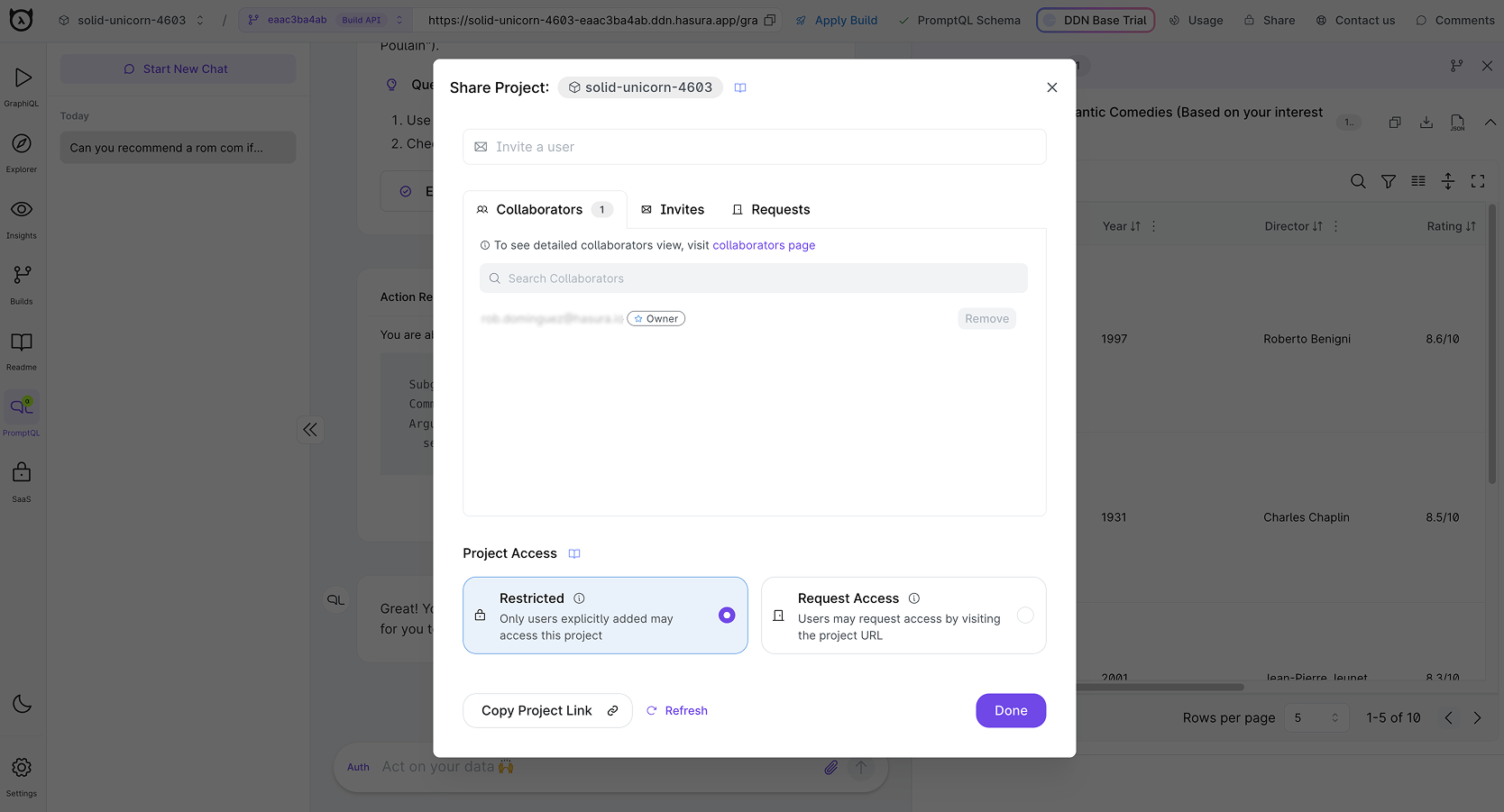

Step 2. Share your project

You can visit the cloud project's console and share your new PromptQL app!

Users with any access level, including “Read only”, can access your PromptQL app. Read-only users cannot modify your project or invite additional users.

You can also choose “Request Access” so that anyone who arrives at the project URL can request access.