Moderate User-Generated Content with ChatGPT

Introduction

Event Triggers let you call a webhook with a contextual payload whenever a specific event occurs in your database. In this recipe, we'll create an Event Trigger that fires whenever a new review is inserted into our reviews table. We'll then send the review to ChatGPT to determine whether it contains inappropriate content. If it does, we'll keep the review hidden and send a notification to the user; otherwise, we'll mark it as visible.

DOCS E-COMMERCE SAMPLE APP

This recipe depends on the docs e-commerce sample app. If you haven't already deployed the sample app, you can do so with one click below. If you've already deployed it, simply use your existing project.

Prerequisites

Before getting started, ensure that you have the following in place:

- The docs e-commerce sample app deployed to Hasura Cloud.

- An OpenAI API key.

If you plan on using a webhook endpoint hosted on your own machine, ensure that you have a tunneling service such as ngrok set up so that your Cloud Project can communicate with your local machine.

Our model

Event Triggers are designed to run when specific operations occur on a table, such as insertions, updates, and deletions. When architecting your own Event Trigger, you need to consider the following:

- Which table's changes will initiate the Event Trigger?

- Which operation(s) on that table will initiate the Event Trigger?

- What should my webhook do with the data it receives?

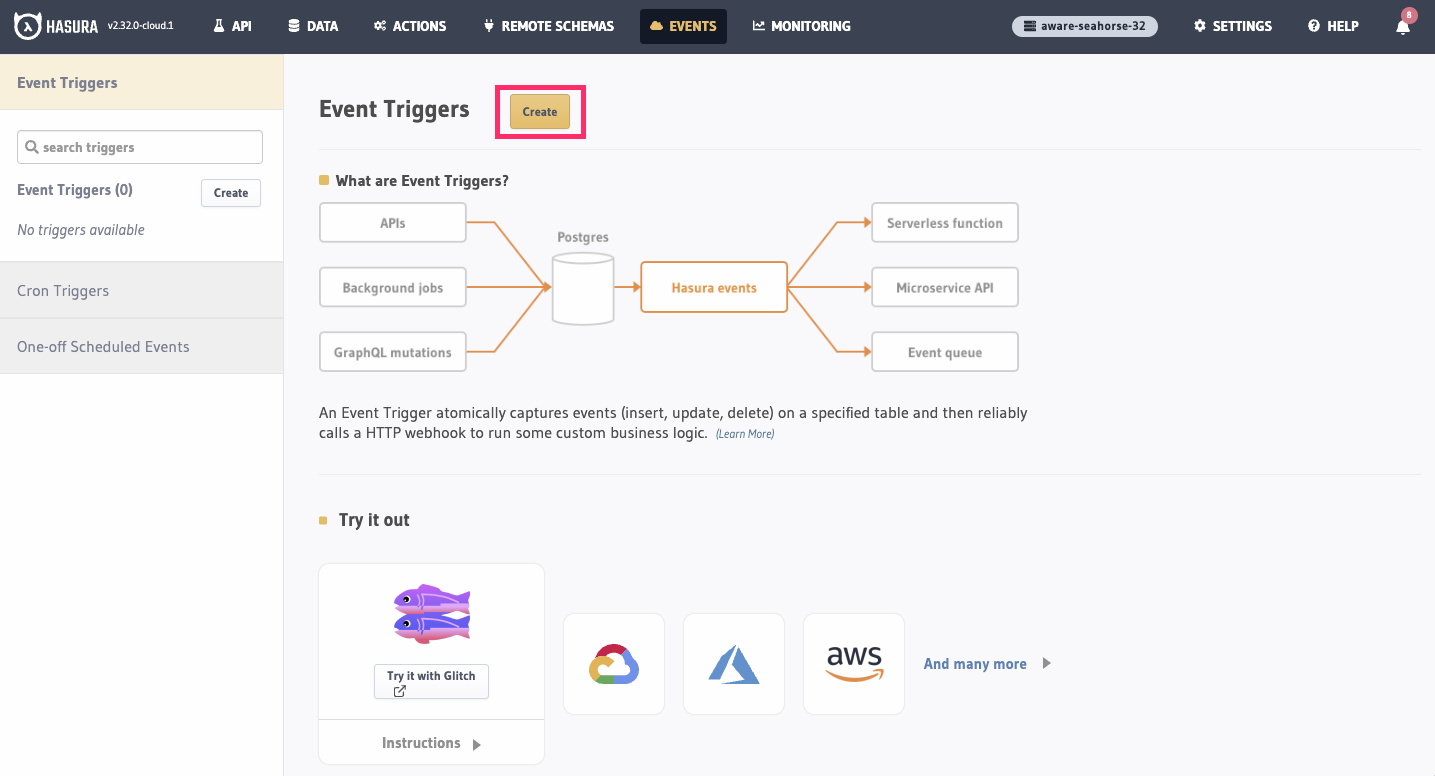

Step 1: Create the Event Trigger

Head to the Events tab of the Hasura Console and click Create:

Step 2: Configure the Event Trigger

First, provide a name for your trigger, e.g., moderate_product_review, and choose the reviews table and the insert operation so that the trigger fires on new reviews. Then, enter a webhook URL that will be called when the event is triggered. This webhook will be responsible for sending the new review to ChatGPT and determining whether or not its content is appropriate; it can be hosted anywhere and written in any language you like.

The route we'll use on our webhook is /check-review. Below, we'll see what this looks like with a service like ngrok, but the format will follow this template:

https://<your-webhook-url>/check-review

Since our project is running on Hasura Cloud, and our handler will run on our local machine, we'll use ngrok to expose the webhook endpoint to the internet. This will allow us to expose a public URL that will forward requests to our local machine and the server we'll configure below.

You'll need to modify your webhook URL to use the public URL provided by ngrok.

After installing ngrok and authenticating, you can do this by running:

ngrok http 4000

Then, copy the Forwarding value for use in our webhook 🎉

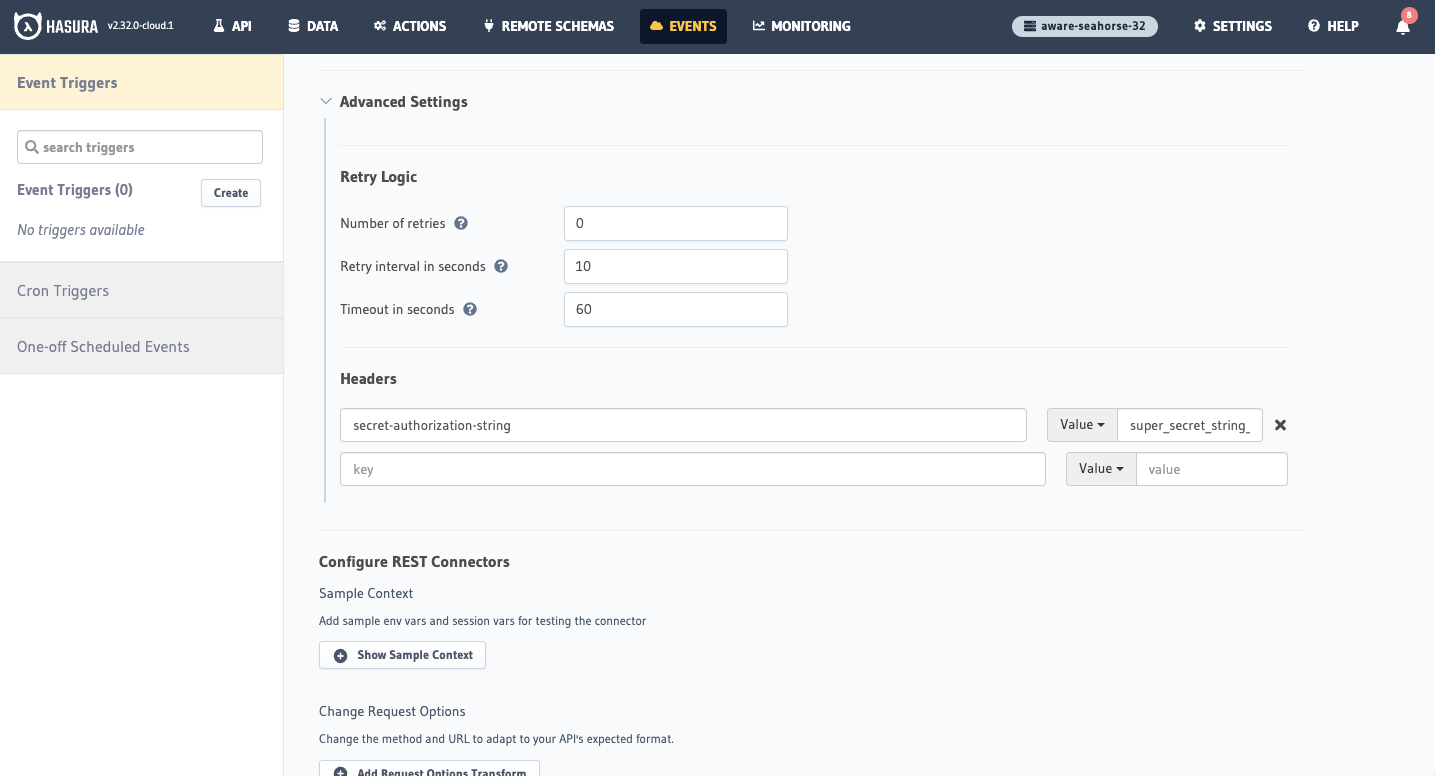

Under Advanced Settings, we can configure the headers that will be sent with the request. We'll add an authentication header to prevent abuse of the endpoint and ensure that only Hasura can trigger the event. Set the Key as secret-authorization-string and the Value as super_secret_string_123:

Before exiting, open the Add Request Options Transform section and check POST. Then, click Create Event Trigger.
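On the receiving side, the webhook has to compare the incoming header against the same value. The snippet below is a minimal sketch of that check, not part of the recipe's code: the WEBHOOK_SECRET environment variable name and the is_authorized helper are assumptions made for illustration, and the fallback value simply mirrors the example string above.

```python
import hmac
import os

# Hypothetical environment variable name; the recipe's webhook hardcodes the value instead.
EXPECTED_SECRET = os.environ.get("WEBHOOK_SECRET", "super_secret_string_123")


def is_authorized(headers: dict) -> bool:
    """Return True when the request carries the expected shared secret."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {key.lower(): value for key, value in headers.items()}
    provided = normalized.get("secret-authorization-string", "")
    # compare_digest takes the same time whether or not the values match,
    # which avoids leaking the secret through response timing.
    return hmac.compare_digest(provided.encode(), EXPECTED_SECRET.encode())
```

Reading the secret from the environment keeps it out of source control, and the constant-time comparison is a small hardening step over a plain equality check.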

Step 3: Create a webhook to handle the request

Whenever new data is inserted into our reviews table, the Event Trigger fires and Hasura sends a POST request to the webhook URL you provided. Our webhook will parse the request, ensure the header is correct, and then pass our data to ChatGPT. Depending on the response from ChatGPT, we'll either mark the review as visible, or leave it hidden and send a notification to the user.

Getting accurate and useful output from a language model depends heavily on the input you give it, and much of that comes down to how you engineer your prompt. This can take some experimentation, but this is the prompt that we use in the webhook's code below:

You are a content moderator for SuperStore.com. A customer has left a review for a product they purchased. Your response should only be a JSON object with two properties: "feedback" and "is_appropriate". The "feedback" property should be a string containing your response to the customer only if the review "is_appropriate" value is false. The feedback should be on why their review was flagged as inappropriate, not a response to their review. The "is_appropriate" property should be a boolean indicating whether or not the review contains inappropriate content. The review is as follows:
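Because the prompt asks for a bare JSON object, the webhook can parse the reply directly, but a model may occasionally return extra text or a different shape. The following is a hedged sketch of a stricter parser; parse_moderation_response is a hypothetical helper that is not part of the recipe's code, and it only checks the two properties the prompt names.

```python
import json


def parse_moderation_response(raw: str) -> dict:
    """Parse the model's reply and check it has the shape the prompt asks for."""
    # json.loads raises json.JSONDecodeError (a ValueError subclass) on non-JSON replies.
    report = json.loads(raw)
    if not isinstance(report, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(report.get("is_appropriate"), bool):
        raise ValueError('"is_appropriate" must be a boolean')
    # The prompt only requires feedback for flagged reviews, so default to an empty string.
    feedback = report.get("feedback") or ""
    if not isinstance(feedback, str):
        raise ValueError('"feedback" must be a string')
    return {"is_appropriate": report["is_appropriate"], "feedback": feedback}
```

A caller can treat any ValueError as "moderation failed" and leave the review hidden rather than acting on a malformed reply.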

Event Triggers sent by Hasura to your webhook as a request include a payload with event data nested inside the body object of the request. This event object can then be parsed and values extracted from it to be used in your webhook.
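To make the nesting concrete, here is a rough sketch of such a payload for an insert into reviews, together with a small function that pulls out the fields the webhook needs. The payload is trimmed and its values are illustrative (the review id, trigger id, and timestamp are placeholders); extract_review is a hypothetical helper name, not something Hasura provides.

```python
# Trimmed, illustrative Event Trigger payload; real payloads carry additional metadata.
sample_payload = {
    "id": "11111111-1111-1111-1111-111111111111",  # placeholder event id
    "created_at": "2024-01-01T00:00:00.000Z",  # placeholder timestamp
    "trigger": {"name": "moderate_product_review"},
    "table": {"schema": "public", "name": "reviews"},
    "event": {
        "op": "INSERT",
        "data": {
            "old": None,  # there is no previous row for an insert
            "new": {
                "id": "00000000-0000-0000-0000-000000000000",  # placeholder review id
                "product_id": "7992fdfa-65b5-11ed-8612-6a8b11ef7372",
                "user_id": "7cf0a66c-65b7-11ed-b904-fb49f034fbbb",
                "text": "I love this shirt! It's so comfortable and easy to wear.",
                "rating": 5,
            },
        },
    },
}


def extract_review(payload: dict) -> dict:
    """Pull the fields the moderation webhook uses out of the event payload."""
    new_row = payload["event"]["data"]["new"]
    return {
        "review_id": new_row["id"],
        "user_id": new_row["user_id"],
        "text": new_row["text"],
    }
```

The inserted row always sits under event.data.new, which is the same path both webhook implementations below read from.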

Below, we've written example webhooks in JavaScript (using Express with body-parser to parse the request) and in Python (using Flask). As we established earlier, the server runs on port 4000. If you're attempting to run this locally, follow the instructions below. If you're running this in a hosted environment, use this code as a guide to write your own webhook.

- JavaScript

- Python

For the JavaScript version, initialize a new project with npm init and install the following dependencies:

npm install express body-parser openai

Then, create a new file called index.js, add the following code, and update the config values:

const express = require('express');
const bodyParser = require('body-parser');
const openai = require('openai');

// Hasura and OpenAI config
const config = {
  url: '<YOUR_PROJECT_ENDPOINT>',
  secret: '<YOUR_ADMIN_SECRET>',
  openAIKey: '<YOUR_OPENAI_KEY>',
};

// OpenAI API config and client
const newOpenAI = new openai.OpenAI({
  apiKey: config.openAIKey,
});

const prompt = `You are a content moderator for SuperStore.com. A customer has left a review for a product they purchased. Your response should only be a JSON object with two properties: "feedback" and "is_appropriate". The "feedback" property should be a string containing your response to the customer only if the review "is_appropriate" value is false. The feedback should be on why their review was flagged as inappropriate, not a response to their review. The "is_appropriate" property should be a boolean indicating whether or not the review contains inappropriate content. The review is as follows:`;

// Send a request to ChatGPT to see if the review contains inappropriate content
async function checkReviewWithChatGPT(reviewText) {
  try {
    const moderationReport = await newOpenAI.chat.completions.create({
      model: 'gpt-3.5-turbo',
      messages: [
        {
          role: 'user',
          content: `${prompt} ${reviewText}`,
        },
      ],
    });
    // The prompt asks for a bare JSON object, so the reply should parse directly
    return JSON.parse(moderationReport.choices[0].message.content);
  } catch (err) {
    // Covers both API failures and replies that aren't valid JSON
    console.error(`Error evaluating content: ${reviewText}`, err);
    return err;
  }
}

// Mark their review as visible if there's no feedback
async function markReviewAsVisible(userReview, reviewId) {
  const response = await fetch(config.url, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'x-hasura-admin-secret': config.secret,
    },
    body: JSON.stringify({
      query: `
        mutation UpdateReviewToVisible($review_id: uuid!) {
          update_reviews_by_pk(pk_columns: {id: $review_id}, _set: {is_visible: true}) {
            id
          }
        }
      `,
      variables: {
        review_id: reviewId,
      },
    }),
  });
  console.log(`🎉 Review approved: ${userReview}`);
  const { data } = await response.json();
  return data.update_reviews_by_pk;
}

// Send a notification to the user if their review is flagged
async function sendNotification(userReview, userId, reviewFeedback) {
  const response = await fetch(config.url, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'x-hasura-admin-secret': config.secret,
    },
    body: JSON.stringify({
      query: `
        mutation InsertNotification($user_id: uuid!, $review_feedback: String!) {
          insert_notifications_one(object: {user_id: $user_id, message: $review_feedback}) {
            id
          }
        }
      `,
      variables: {
        user_id: userId,
        review_feedback: reviewFeedback,
      },
    }),
  });
  console.log(
    `🚩 Review flagged. This is not visible to users: ${userReview}\n🔔 The user has received the following notification: ${reviewFeedback}`
  );
  const { data } = await response.json();
  return data.insert_notifications_one;
}

const app = express();
app.use(bodyParser.json());
app.use(bodyParser.urlencoded({ extended: true }));

// Our route for the webhook
app.post('/check-review', async (req, res) => {
  // Confirm the auth header is correct.
  // Ideally, you'd keep the secret in an environment variable.
  const authHeader = req.headers['secret-authorization-string'];
  if (authHeader !== 'super_secret_string_123') {
    return res.status(401).json({
      message: 'Unauthorized',
    });
  }

  // We'll parse the review from the event payload
  const userReview = req.body.event.data.new.text;
  const userId = req.body.event.data.new.user_id;
  const reviewId = req.body.event.data.new.id;

  // Then check the review with ChatGPT
  const moderationReport = await checkReviewWithChatGPT(userReview);

  // If ChatGPT failed or replied with something unexpected, return an error
  // instead of guessing, so Hasura records the delivery as failed
  if (!moderationReport || typeof moderationReport.is_appropriate !== 'boolean') {
    return res.status(500).json({
      message: 'Unable to moderate review',
    });
  }

  // If the review is appropriate, mark it as visible; if not, send a notification to the user
  if (moderationReport.is_appropriate) {
    await markReviewAsVisible(userReview, reviewId);
  } else {
    await sendNotification(userReview, userId, moderationReport.feedback);
  }

  // Return a JSON response to the client
  res.json({
    GPTResponse: moderationReport,
  });
});

// Start the server
app.listen(4000, () => {
  console.log('Server started on port 4000');
});

You can run the server by running node index.js in your terminal.

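For the Python version, make sure you have the necessary dependencies installed. You can use pip to install them (the quotes keep shells such as zsh from interpreting the square brackets):

pip install "Flask[async]" openai requests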

Then, create a new file called index.py and add the following code:

from flask import Flask, request, jsonify
from openai import OpenAI
import requests
import json

app = Flask(__name__)

# Hasura and OpenAI config
config = {
    'url': '<YOUR_PROJECT_ENDPOINT>',
    'secret': '<YOUR_ADMIN_SECRET>',
    'openAIKey': '<YOUR_OPENAI_KEY>',
}

# OpenAI API config and client (uses the client interface from openai 1.0 and later)
client = OpenAI(api_key=config["openAIKey"])

prompt = (
    "You are a content moderator for SuperStore.com. A customer has left a review for a product they purchased. "
    'Your response should only be a JSON object with two properties: "feedback" and "is_appropriate". '
    'The "feedback" property should be a string containing your response to the customer only if the review "is_appropriate" value is false. '
    "The feedback should be on why their review was flagged as inappropriate, not a response to their review. "
    'The "is_appropriate" property should be a boolean indicating whether or not the review contains inappropriate content and it should be set by you. '
    '"is_appropriate" is set to TRUE for appropriate content and to FALSE for inappropriate content. '
    "The review is as follows:"
)


# Send a request to ChatGPT to see if the review contains inappropriate content
def check_review_with_chat_gpt(review_text):
    try:
        response = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": prompt},
                {"role": "user", "content": review_text},
            ],
        )
        response_content = response.choices[0].message.content
        return json.loads(response_content)
    except Exception as e:
        # Covers both API failures and replies that aren't valid JSON
        print(f"Error evaluating content: {review_text}")
        print(str(e))
        return None

# Mark their review as visible if there's no feedback
async def mark_review_as_visible(user_review, review_id):
    response = requests.post(
        config["url"],
        json={
            "query": """
                mutation UpdateReviewToVisible($review_id: uuid!) {
                    update_reviews_by_pk(pk_columns: {id: $review_id}, _set: {is_visible: true}) {
                        id
                    }
                }
            """,
            "variables": {
                "review_id": review_id,
            },
        },
        headers={
            "Content-Type": "application/json",
            "x-hasura-admin-secret": config["secret"],
        },
    )
    print(f"🎉 Review approved: {user_review}")
    # GraphQL responses wrap the result in a top-level "data" key
    data = response.json().get("data") or {}
    return data.get("update_reviews_by_pk", None)

# Send a notification to the user if their review is flagged
def send_notification(user_review, user_id, review_feedback):
    query = """
        mutation InsertNotification($user_id: uuid!, $review_feedback: String!) {
            insert_notifications_one(object: {user_id: $user_id, message: $review_feedback}) {
                id
            }
        }
    """
    variables = {"user_id": user_id, "review_feedback": review_feedback}
    headers = {
        "Content-Type": "application/json",
        "x-hasura-admin-secret": config["secret"],
    }
    url = config["url"]
    request_body = {"query": query, "variables": variables}

    try:
        response = requests.post(url, json=request_body, headers=headers)
        # Raise an error for bad responses
        response.raise_for_status()
        response_json = response.json()

        if "errors" in response_json:
            # Handle the case where there are errors in the response
            print(f"Failed to send a notification for: {user_review}")
            print(response_json)
            return None

        # Extract the inserted notification from the response
        data = response_json.get("data", {})
        notification = data.get("insert_notifications_one", {})
        print(
            f"🚩 Review flagged. This is not visible to users: {user_review}\n🔔 The user has received the following notification: {review_feedback}"
        )
        return notification
    except Exception as e:
        # Handle exceptions or network errors
        print(f"Error sending a notification for: {user_review}")
        print(str(e))
        return None

@app.route("/check-review", methods=["POST"])
async def check_review():
    # Confirm the auth header is correct
    auth_header = request.headers.get("secret-authorization-string")
    if auth_header != "super_secret_string_123":
        return jsonify({"message": "Unauthorized"}), 401

    # Parse the review from the event payload
    data = request.get_json()
    user_review = data["event"]["data"]["new"]["text"]
    user_id = data["event"]["data"]["new"]["user_id"]
    review_id = data["event"]["data"]["new"]["id"]

    # Check the review with ChatGPT
    moderation_report = check_review_with_chat_gpt(user_review)

    # If ChatGPT failed or replied with something unexpected, return an error
    # instead of guessing, so Hasura records the delivery as failed
    if not isinstance(moderation_report, dict) or "is_appropriate" not in moderation_report:
        return jsonify({"message": "Unable to moderate review"}), 500

    # If the review is appropriate, mark it as visible; if not, send a notification to the user
    if moderation_report["is_appropriate"]:
        await mark_review_as_visible(user_review, review_id)
    else:
        send_notification(user_review, user_id, moderation_report.get("feedback"))

    return jsonify({"GPTResponse": moderation_report})


if __name__ == "__main__":
    app.run(port=4000)

You can run the server by running python3 index.py in your terminal.

If you see a startup message showing that the server is listening on port 4000 (the JavaScript version prints Server started on port 4000), you're good to go!
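Before wiring everything through Hasura, it can help to hit the local endpoint directly with a simulated event. The sketch below builds such a request using only the Python standard library; build_test_request and send are hypothetical helper names, the payload is a trimmed imitation of what Hasura sends, and the review id is a placeholder that matches no real row.

```python
import json
import urllib.request


def build_test_request(review_text, base_url="http://localhost:4000"):
    """Build a POST request that imitates a (trimmed) Event Trigger delivery."""
    payload = {
        "table": {"schema": "public", "name": "reviews"},
        "event": {
            "op": "INSERT",
            "data": {
                "old": None,
                "new": {
                    "id": "00000000-0000-0000-0000-000000000000",  # placeholder review id
                    "user_id": "7cf0a66c-65b7-11ed-b904-fb49f034fbbb",
                    "text": review_text,
                },
            },
        },
    }
    return urllib.request.Request(
        f"{base_url}/check-review",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "secret-authorization-string": "super_secret_string_123",
        },
        method="POST",
    )


def send(req):
    """Send the request and return the webhook's decoded JSON response."""
    with urllib.request.urlopen(req) as response:
        return json.loads(response.read())


# Example (requires the webhook server to be running locally):
# print(send(build_test_request("I love this shirt!")))
```

Keep in mind that the webhook still calls OpenAI and your Hasura project when it receives this request, so a flagged test review would insert a real notification for the sample user.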

Step 4: Test the setup

Testing with appropriate content

With your server running, Hasura should be able to hit the endpoint. We can test this by inserting a new row into our

reviews table. Let's do this with the following mutation from the API tab of the Console:

mutation InsertReview {
  insert_reviews_one(
    object: {
      product_id: "7992fdfa-65b5-11ed-8612-6a8b11ef7372"
      user_id: "7cf0a66c-65b7-11ed-b904-fb49f034fbbb"
      text: "I love this shirt! It's so comfortable and easy to wear."
      rating: 5
    }
  ) {
    id
  }
}

As this review doesn't contain any questionable content, it should be marked as visible. We should see output similar to this in our terminal:

🎉 Review approved: I love this shirt! It's so comfortable and easy to wear.

Testing with inappropriate content

Now, let's try inserting a review that contains inappropriate content. We'll do this by running the following mutation in our Hasura Console:

mutation InsertReview {
  insert_reviews_one(
    object: {
      product_id: "7992fdfa-65b5-11ed-8612-6a8b11ef7372"
      user_id: "7cf0a66c-65b7-11ed-b904-fb49f034fbbb"
      text: "<Something you would expect to be flagged>"
      rating: 1
    }
  ) {
    id
  }
}

While this mutation will succeed and insert the review into our database, it will not be marked as visible. Our terminal will return something like this:

🚩 Review flagged. This is not visible to users: <INAPPROPRIATE REVIEW>

🔔 The user has received the following notification: Your review has been flagged as inappropriate due to <CHATGPT'S RHETORIC>.

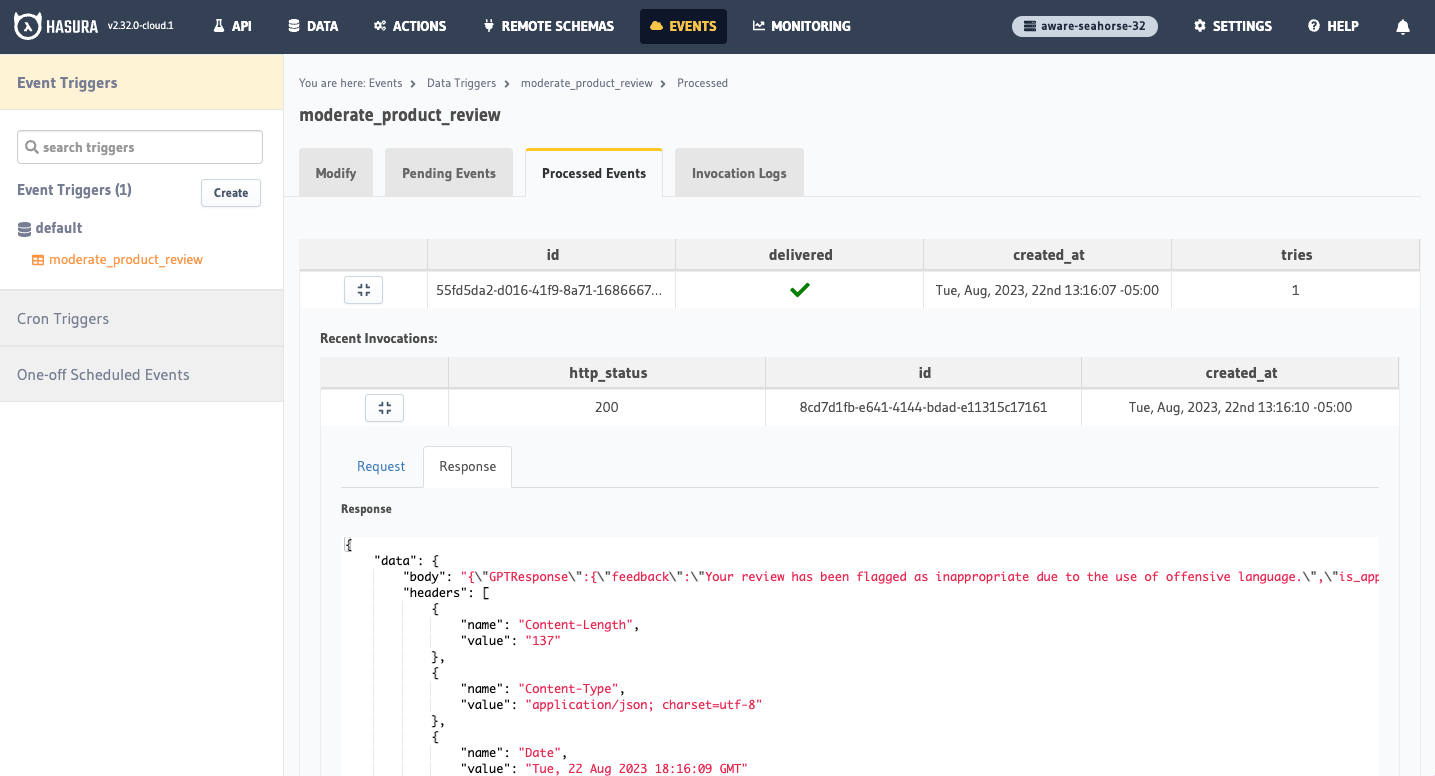

And we can head to the Events tab and see the response from this newest mutation:

Additionally, Hasura created a new notification for the user, alerting them to the status of their review based on the response from ChatGPT. You can find this by heading to the Data tab and clicking on the notifications table.

Feel free to customize the webhook implementation to fit your specific requirements. Remember to handle error scenarios, implement necessary validations, and add appropriate security measures to your webhook endpoint.
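As one example of such a validation, the webhook could confirm that an incoming payload really describes an insert into reviews with non-empty text before spending an OpenAI call on it. This is a minimal sketch under those assumptions; validate_event is a hypothetical helper, and the exact checks you need will depend on your own schema.

```python
from typing import Tuple


def validate_event(payload) -> Tuple[bool, str]:
    """Return (ok, reason) for an incoming Event Trigger payload."""
    if not isinstance(payload, dict):
        return False, "payload is not a JSON object"
    table = (payload.get("table") or {}).get("name")
    event = payload.get("event") or {}
    if table != "reviews":
        return False, f"unexpected table: {table!r}"
    if event.get("op") != "INSERT":
        return False, f"unexpected operation: {event.get('op')!r}"
    # The inserted row lives under event.data.new
    new_row = (event.get("data") or {}).get("new") or {}
    text = new_row.get("text")
    if not isinstance(text, str) or not text.strip():
        return False, "review text is missing or empty"
    return True, "ok"
```

A handler could call this right after the header check and respond with a 400 status and the returned reason whenever the first element is False.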