Commands Modify Data

Introduction

In DDN, commands represent operations in your API that can be executed. They can modify data in your data sources (inserts, updates, deletes), perform complex read operations, or execute custom business logic.

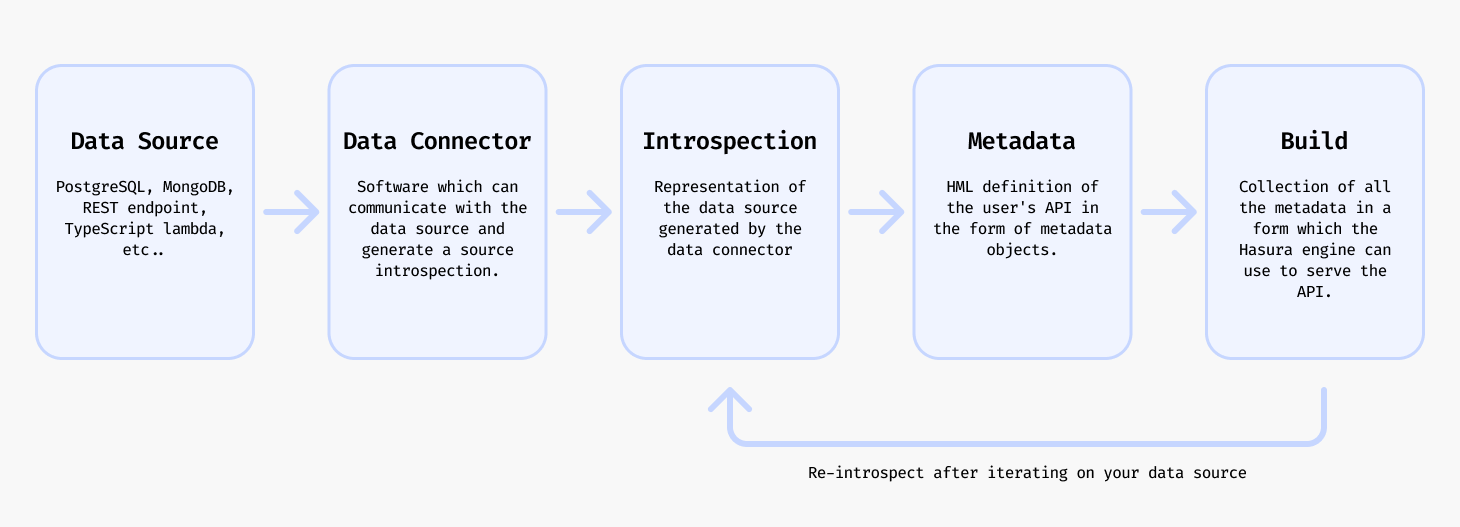

Lifecycle

The lifecycle of creating a command in your metadata is as follows:

- Have some operation in your data source that you want to make executable via your API.

- Introspect your data source using the DDN CLI with the relevant data connector to fetch the operation resources.

- Add the command to your metadata with the DDN CLI.

- Create a build of your supergraph API with the DDN CLI.

- Serve your build as your API with the Hasura engine either locally or in the cloud.

- Iterate on your API by repeating this process or by editing your metadata manually as needed.
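The CLI steps in this lifecycle can be sketched as a small Python helper that assembles the commands in order. The helper name `lifecycle_commands` and the `my_pg` / `insert_users` values are illustrative assumptions; the `ddn` subcommands are the ones used later on this page. The script only prints the commands, so it can be run without the CLI installed.

```python
import shlex


def lifecycle_commands(connector: str, operation: str) -> list[list[str]]:
    """Return the ddn invocations for one pass through the lifecycle, in order."""
    return [
        ["ddn", "connector", "introspect", connector],    # fetch operation resources
        ["ddn", "command", "add", connector, operation],  # add the command to metadata
        ["ddn", "supergraph", "build", "local"],          # create a local build
        ["ddn", "run", "docker-start"],                   # serve the build locally
    ]


if __name__ == "__main__":
    # Hypothetical connector and operation names, used only for illustration.
    for argv in lifecycle_commands("my_pg", "insert_users"):
        print(shlex.join(argv))
```

To execute the steps instead of printing them, each list could be passed to `subprocess.run(argv, check=True)`, which stops at the first failing step.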

Create a command

To add a command you will need to have a data connector already set up and connected to the data source. Follow the Quickstart or the tutorial in How to Build with DDN to get to that point.

From a source operation

Some data connectors support the ability to introspect your data source to discover the commands that can be added to your supergraph automatically.

ddn connector introspect <connector_name>

ddn connector show-resources <connector_name>

ddn command add <connector_link_name> <operation_name>

Alternatively, you can add all available commands at once by specifying "*".

ddn command add <connector_link_name> "*"

This will add commands with their accompanying metadata definitions to your metadata.

Via native operations

Some data connectors support the ability to add commands via native operations so that you can add any operation that is not supported by the automatic introspection process.

For classic database connectors, this will be native query code for that source. This can be, for example, a more complex read operation or a way to run custom business logic, which can be exposed as queries or mutations in the GraphQL API.

For Lambda connectors (TypeScript, Go, Python, etc.), this will be a function (read-only) or a procedure (mutation or other side effects) that can also be exposed as a query or mutation in the GraphQL API.

- PostgreSQL

- MongoDB

- ClickHouse

- Node.js TypeScript

- Python

- Go

The process of creating a native operation for PostgreSQL is the following.

Within your connector's directory, you can add a new file with a .sql extension to define a native operation.

Then, use the PostgreSQL connector's plugin to add the native operation to your connector's configuration:

ddn connector plugin \

--connector <subgraph_name>/<path-to-your-connector>/connector.yaml \

-- \

native-operation create \

--operation-path <subgraph_name>/<path-to-your-connector>/native-operations/<operation_type>/<operation_name>.sql \

--kind mutation

By specifying the --kind mutation flag, you are indicating that the operation is a mutation. If you specify --kind query, the operation will be a query.

ddn connector introspect <connector_name>

ddn connector show-resources <connector_name>

ddn command add <connector_name> <mutation_name>

ddn supergraph build local

ddn run docker-start

Now in your console you can run the mutation with GraphQL.

See the tutorial for creating a native mutation in PostgreSQL here.

In MongoDB, you can create a native operation by defining an aggregation pipeline in a JSON file and adding that file to your connector's directory.

See the syntax for MongoDB native operations here.

Store your native operation in an appropriate directory, possibly named for the operation type, in your connector's directory.

ddn connector introspect <connector_name>

ddn connector show-resources <connector_name>

ddn command add <connector_name> <operation_name>

ddn supergraph build local

ddn run docker-start

Now in your console you can run the operation with GraphQL.

See the tutorial for creating a native operation in MongoDB here.

Commands are currently not supported on ClickHouse.

You can run whatever arbitrary code you want in your TypeScript connector and expose it as a GraphQL mutation or query in your supergraph API.

ddn connector init my_ts -i

/**

* @readonly

*/

export function myCustomCode(myInput: string): string {

// Do something with the input

return "My output";

}

By adding the @readonly tag, we indicate that this function is a read-only operation to be exposed as an NDC function, which will ultimately show up as a GraphQL query. Leaving the tag off exposes the function as an NDC procedure, which will be a GraphQL mutation.

ddn connector introspect my_ts

ddn command add my_ts myCustomCode

ddn supergraph build local

ddn run docker-start

ddn console --local

query MyCustomCode {

myCustomCode(myInput: "My input")

}

{

"data": {

"myCustomCode": "My output"

}

}

You can run whatever arbitrary code you want in your Python connector and expose it as a GraphQL mutation or query in your supergraph API.

ddn connector init my_py -i

from hasura_ndc import start

from hasura_ndc.function_connector import FunctionConnector

connector = FunctionConnector()

@connector.register_query

def my_custom_code(my_input: str) -> str:

    # Do something with the input

    return "My output"

if __name__ == "__main__":

    start(connector)

By adding the @connector.register_query decorator, we indicate that this function is to be exposed as an NDC function, which will ultimately show up as a GraphQL query. If you use @connector.register_mutation instead, the function will be exposed as an NDC procedure, which will be a GraphQL mutation.

ddn connector introspect my_py

ddn command add my_py my_custom_code

ddn supergraph build local

ddn run docker-start

ddn console --local

query MyCustomCode {

myCustomCode(myInput: "My input")

}

{

"data": {

"myCustomCode": "My output"

}

}
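Notice that the Python function `my_custom_code` and its `my_input` parameter appear as `myCustomCode` and `myInput` in the GraphQL query above. A minimal sketch of that snake_case to camelCase mapping follows. It is inferred only from the examples on this page; the helper name `to_camel_case` is hypothetical, and the naming rules actually applied by the CLI may differ or be configurable.

```python
def to_camel_case(snake_name: str) -> str:
    """Convert a snake_case identifier to camelCase, e.g. for a GraphQL field name."""
    head, *rest = snake_name.split("_")
    # Keep the first word as-is and capitalize the first letter of each later word.
    return head + "".join(word[:1].upper() + word[1:] for word in rest)


if __name__ == "__main__":
    for name in ("my_custom_code", "my_input", "update_post_titles_by_age"):
        print(f"{name} -> {to_camel_case(name)}")
```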

You can run whatever arbitrary code you want in your Go connector and expose it as a GraphQL mutation or query in your supergraph API.

ddn connector init my_go -i

package functions

import (

"context"

"hasura-ndc.dev/ndc-go/types"

)

// InputArguments represents the input of the native operation.

type InputArguments struct {

MyInput string `json:"myInput"`

}

// OutputResult represents the output of the native operation.

type OutputResult struct {

MyOutput string `json:"myOutput"`

}

// ProcedureCustomCode is a native operation that can be called from the API.

func ProcedureCustomCode(ctx context.Context, state *types.State, arguments *InputArguments) (*OutputResult, error) {

// Do something with the input

return &OutputResult{

MyOutput: "My output",

}, nil

}

Using the prefix Procedure ensures ProcedureCustomCode() is exposed as a mutation in your API. Prefixing with Function identifies it as a function to be exposed as a query in your API.

Both kinds take typed input arguments and return typed results, which the connector uses to generate the corresponding GraphQL schema.
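The prefix convention can be illustrated with a short sketch. It is written in Python purely for illustration and is not the Go connector's implementation. The helper name `classify_go_operation` is hypothetical, and the resulting names follow the `customCode` and `shoutName` commands used on this page.

```python
def classify_go_operation(go_func_name: str) -> tuple[str, str]:
    """Map a Go function name to (operation kind, exposed command name)."""
    prefixes = {"Function": "query", "Procedure": "mutation"}
    for prefix, kind in prefixes.items():
        if go_func_name.startswith(prefix) and len(go_func_name) > len(prefix):
            stem = go_func_name[len(prefix):]
            # Lower-case the first letter: "CustomCode" -> "customCode".
            return kind, stem[0].lower() + stem[1:]
    raise ValueError(f"{go_func_name!r} has no Function/Procedure prefix")


if __name__ == "__main__":
    print(classify_go_operation("ProcedureCustomCode"))  # ('mutation', 'customCode')
    print(classify_go_operation("FunctionShoutName"))    # ('query', 'shoutName')
```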

ddn connector introspect my_go

ddn command add my_go customCode

ddn supergraph build local

ddn run docker-start

ddn console --local

mutation MyCustomCode {

customCode(myInput: "My input") {

myOutput

}

}

{

"data": {

"customCode": {

"myOutput": "My output"

}

}

}

You can now build your supergraph API, serve it, and execute your commands.

For a walkthrough on how to create a command, see the Tutorials section below.

Update a command

If you want to update your command to reflect a change in the underlying data source, first introspect to get the latest resources and then update the relevant command.

ddn connector introspect <connector_name>

ddn command update <connector_link_name> <command_name>

The output explains which resources were added or updated in the command.

You can now build your supergraph API, serve it, and execute your commands with the updated definitions.

You can also update the command by editing the command's metadata manually.

Delete a command

ddn command remove <command_name>

Along with the command itself, the associated metadata is also removed.

Tutorials

The tutorials below follow on from each particular tutorial in the How to Build with DDN section. Select the relevant data connector to follow the tutorial.

Creating a command

To modify data, you first need to create a command that maps to a specific operation within your data source.

From a source operation

- PostgreSQL

- MongoDB

- ClickHouse

First, show a list of resources identified by the connector.

ddn connector introspect my_pg

ddn connector show-resources my_pg

You will see a list of Commands (Mutations) which have been automatically detected by the connector since they are defined by a foreign key relationship in the database.

ddn command add my_pg insert_users

Rebuild your supergraph API.

ddn supergraph build local

Serve your build.

ddn run docker-start

ddn console --local

Run the mutation:

mutation InsertUser {

insertUsers(objects: { age: "21", name: "Sean" }, postCheck: {}) {

affectedRows

returning {

id

name

age

}

}

}

{

"data": {

"insertUsers": {

"affectedRows": 1,

"returning": [

{

"id": 4,

"name": "Sean",

"age": 21

}

]

}

}

}

The MongoDB data connector defines custom commands via native mutations.

Within your connector's directory, you can add a new JSON configuration file to define a native mutation.

mkdir -p app/connector/my_mongo/native_mutations/

// native_mutations/insert_user.json

{

"name": "insertUser",

"description": "Inserts a user record into the database",

"arguments": {

"name": { "type": { "scalar": "string" } }

},

"resultType": {

"object": "InsertUser"

},

"objectTypes": {

"InsertUser": {

"fields": {

"ok": { "type": { "scalar": "double" } },

"n": { "type": { "scalar": "int" } }

}

}

},

"command": {

"insert": "users",

"documents": [{ "name": "{{ name }}" }]

}

}
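In the definition above, the `{{ name }}` placeholder inside `command` corresponds to the `name` entry under `arguments`. As a sketch of a pre-flight check you could run before introspecting, the snippet below reports placeholders that have no matching argument. It is a hypothetical helper (`undeclared_placeholders`), not part of the connector or the CLI, and it expects the JSON without the leading `//` file-name comment.

```python
import json
import re

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def undeclared_placeholders(native_mutation: dict) -> set[str]:
    """Return placeholder names used in `command` that are missing from `arguments`."""
    declared = set(native_mutation.get("arguments", {}))
    # Serialize the command so placeholders at any nesting depth are found.
    used = set(PLACEHOLDER.findall(json.dumps(native_mutation.get("command", {}))))
    return used - declared


if __name__ == "__main__":
    definition = json.loads("""
    {
      "name": "insertUser",
      "arguments": { "name": { "type": { "scalar": "string" } } },
      "command": { "insert": "users", "documents": [{ "name": "{{ name }}" }] }
    }
    """)
    print(undeclared_placeholders(definition))  # set()
```

An empty set means every placeholder in the command is backed by a declared argument.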

ddn connector introspect my_mongo

ddn connector show-resources my_mongo

ddn command add my_mongo insertUser

Rebuild your supergraph API.

ddn supergraph build local

Serve your build.

ddn run docker-start

ddn console --local

Run the mutation:

mutation InsertUser {

insertUser(name: "Sam") {

ok

n

}

}

{

"data": {

"insertUser": {

"ok": 1,

"n": 1

}

}

}

Commands are currently not supported on ClickHouse.

Via native operations

- PostgreSQL

- MongoDB

- ClickHouse

- Node.js TypeScript

- Python

- Go

Within your connector's directory, you can add a new file with a .sql extension to define a native mutation.

mkdir -p app/connector/my_pg/native_operations/mutations/

Let's create a mutation using a SQL UPDATE statement that updates the titles of all posts by users of a given age, appending that age to each title.

-- native_operations/mutations/update_post_titles_by_age.sql

UPDATE posts

SET title = CASE

WHEN title ~ ' - age \d+$' THEN regexp_replace(title, ' - age \d+$', ' - age ' || {{ age }})

ELSE title || ' - age ' || {{ age }}

END

FROM users

WHERE posts.user_id = users.id

AND users.age = {{ age }}

RETURNING

posts.id,

posts.title,

posts.user_id,

users.name,

users.age;

Arguments are passed to the native mutation as variables surrounded by double curly braces {{ }}.
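To make the placeholder syntax concrete, the sketch below rewrites `{{ name }}` placeholders into numbered positional parameters (`$1`, `$2`, ...) and collects the values to bind. This only illustrates the idea of binding arguments by name. It is an assumption for teaching purposes, not a description of how the PostgreSQL connector processes native operations, and `bind_placeholders` is a hypothetical helper.

```python
import re

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def bind_placeholders(sql: str, arguments: dict) -> tuple[str, list]:
    """Replace {{ name }} placeholders with $1, $2, ... and return the bound values."""
    order: list[str] = []

    def replace(match: re.Match) -> str:
        name = match.group(1)
        if name not in arguments:
            raise KeyError(f"missing argument: {name}")
        if name not in order:
            order.append(name)  # a repeated placeholder reuses the same parameter
        return f"${order.index(name) + 1}"

    rendered = PLACEHOLDER.sub(replace, sql)
    return rendered, [arguments[name] for name in order]


if __name__ == "__main__":
    sql = "SELECT id FROM users WHERE age = {{ age }} OR age = {{ age }} + {{ delta }}"
    print(bind_placeholders(sql, {"age": 25, "delta": 1}))
```

Binding values as parameters rather than splicing them into the SQL text is the usual way to avoid injection problems, which is why the sketch never formats argument values into the statement.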

ddn connector plugin \

--connector app/connector/my_pg/connector.yaml \

-- \

native-operation create \

--operation-path native_operations/mutations/update_post_titles_by_age.sql \

--kind mutation

ddn connector introspect my_pg

ddn connector show-resources my_pg

ddn command add my_pg update_post_titles_by_age

ddn supergraph build local

ddn run docker-start

Now in your console you can run the following mutation to see the results:

mutation UpdatePostTitlesByAge {

updatePostTitlesByAge(age: "25") {

affectedRows

returning {

id

title

}

}

}

{

"data": {

"updatePostTitlesByAge": {

"affectedRows": 2,

"returning": [

{

"id": 1,

"title": "My First Post - age 25"

},

{

"id": 2,

"title": "Another Post - age 25"

}

]

}

}

}

Let's create a native mutation that adds a new user to the database with a name and age using an aggregation pipeline in a JSON file.

See the syntax for MongoDB native operations here.

mkdir -p app/connector/my_mongo/native_mutations/

// native_mutations/create_user.json

{

"name": "createUser",

"description": "Create a new user with name and age",

"resultType": {

"object": "CreateUserResult"

},

"arguments": {

"name": {

"type": {

"scalar": "string"

}

},

"age": {

"type": {

"scalar": "int"

}

}

},

"objectTypes": {

"CreateUserResult": {

"fields": {

"ok": {

"type": {

"scalar": "int"

}

},

"n": {

"type": {

"scalar": "int"

}

}

}

}

},

"command": {

"insert": "users",

"documents": [

{

"name": "{{ name }}",

"age": "{{ age }}",

"user_id": {

"$size": {

"$ifNull": [

{

"$objectToArray": "$$ROOT"

},

[]

]

}

}

}

]

}

}

ddn connector introspect my_mongo

ddn connector show-resources my_mongo

ddn command add my_mongo createUser

ddn supergraph build local

ddn run docker-start

Now in your console you can run the following mutation to see the results:

mutation CreateUser {

createUser(age: 25, name: "Peter") {

n

ok

}

}

{

"data": {

"createUser": {

"n": 1,

"ok": 1

}

}

}

Commands are currently not supported on ClickHouse.

ddn connector init my_ts -i

/**

* @readonly

*/

export function shoutName(name: string): string {

return name.toUpperCase();

}

ddn connector introspect my_ts

ddn command add my_ts shoutName

ddn supergraph build local

ddn run docker-start

ddn console --local

query ShoutTheName {

shoutName(name: "Alice")

}

{

"data": {

"shoutName": "ALICE"

}

}

ddn connector init my_python -i

from hasura_ndc import start

from hasura_ndc.function_connector import FunctionConnector

connector = FunctionConnector()

@connector.register_query

def shout_name(name: str) -> str:

    return name.upper()

if __name__ == "__main__":

    start(connector)

ddn connector introspect my_python

ddn command add my_python shout_name

ddn supergraph build local

ddn run docker-start

ddn console --local

query ShoutTheName {

shoutName(name: "Alice")

}

{

"data": {

"shoutName": "ALICE"

}

}

ddn connector init my_go -i

package functions

import (

"context"

"fmt"

"hasura-ndc.dev/ndc-go/types"

"strings"

)

// NameArguments defines the input arguments for the function

type NameArguments struct {

Name string `json:"name"` // required argument

}

// NameResult defines the output result for the function

type NameResult string

// FunctionShoutName converts a name string to uppercase

func FunctionShoutName(ctx context.Context, state *types.State, arguments *NameArguments) (*NameResult, error) {

if arguments.Name == "" {

return nil, fmt.Errorf("name cannot be empty")

}

upperCaseName := NameResult(strings.ToUpper(arguments.Name))

return &upperCaseName, nil

}

ddn connector introspect my_go

ddn command add my_go shoutName

ddn supergraph build local

ddn run docker-start

ddn console --local

query ShoutTheName {

shoutName(name: "Alice")

}

{

"data": {

"shoutName": "ALICE"

}

}

Lambda connectors allow you to execute custom business logic directly via your API. You can learn more about Lambda connectors in the docs.

Updating a command

Your underlying data source may change over time. You can update your command to reflect these changes.

- PostgreSQL

- MongoDB

- ClickHouse

- Node.js TypeScript

- Python

- Go

For an automatically detected command, you can update the command metadata.

ddn connector introspect my_pg

ddn connector show-resources my_pg

ddn command update my_pg "*"

For example, if you have a native mutation that creates a user, you can modify it to include an additional field on new documents.

// native_mutations/create_user.json

{

"name": "createUser",

"description": "Create a new user with name and age",

"resultType": {

"object": "CreateUserResult"

},

"arguments": {

"name": {

"type": {

"scalar": "string"

}

},

"age": {

"type": {

"scalar": "int"

}

},

"role": {

"type": {

"nullable": {

"scalar": "string"

}

}

} // add an argument

},

"objectTypes": {

"CreateUserResult": {

"fields": {

"ok": {

"type": {

"scalar": "int"

}

},

"n": {

"type": {

"scalar": "int"

}

}

}

}

},

"command": {

"insert": "users",

"documents": [

{

"name": "{{ name }}",

"age": "{{ age }}",

"role": "{{ role }}",

"user_id": {

"$size": {

"$ifNull": [

{

"$objectToArray": "$$ROOT"

},

[]

]

}

}

}

]

}

}

ddn connector introspect my_mongo

ddn connector show-resources my_mongo

Then, either update the specific command or update all commands.

ddn command update my_mongo createUser

ddn command update my_mongo "*"

Commands are currently not supported on ClickHouse.

You can update the command metadata for the Node.js lambda connector with the following workflow.

Make changes to the function in your editor, then run the following commands to update the command metadata.

ddn connector introspect my_ts

ddn connector show-resources my_ts

ddn command update my_ts shoutName

ddn command update my_ts "*"

You can update the command metadata for the Python lambda connector with the following workflow.

Make changes to the function in your editor, then run the following commands to update the command metadata.

ddn connector introspect my_python

ddn connector show-resources my_python

ddn command update my_python shout_name

ddn command update my_python "*"

You can update the command metadata for the Go lambda connector with the following workflow.

Make changes to the function in your editor, then run the following commands to update the command metadata.

ddn connector introspect my_go

ddn connector show-resources my_go

ddn command update my_go shoutName

ddn command update my_go "*"

Deleting a command

ddn command remove <command_name>

Reference

You can learn more about commands in the metadata reference docs.