Models Read Data

Introduction

In DDN, models represent entities or collections that can be queried in your data sources, such as tables, views, collections, native queries, and more.

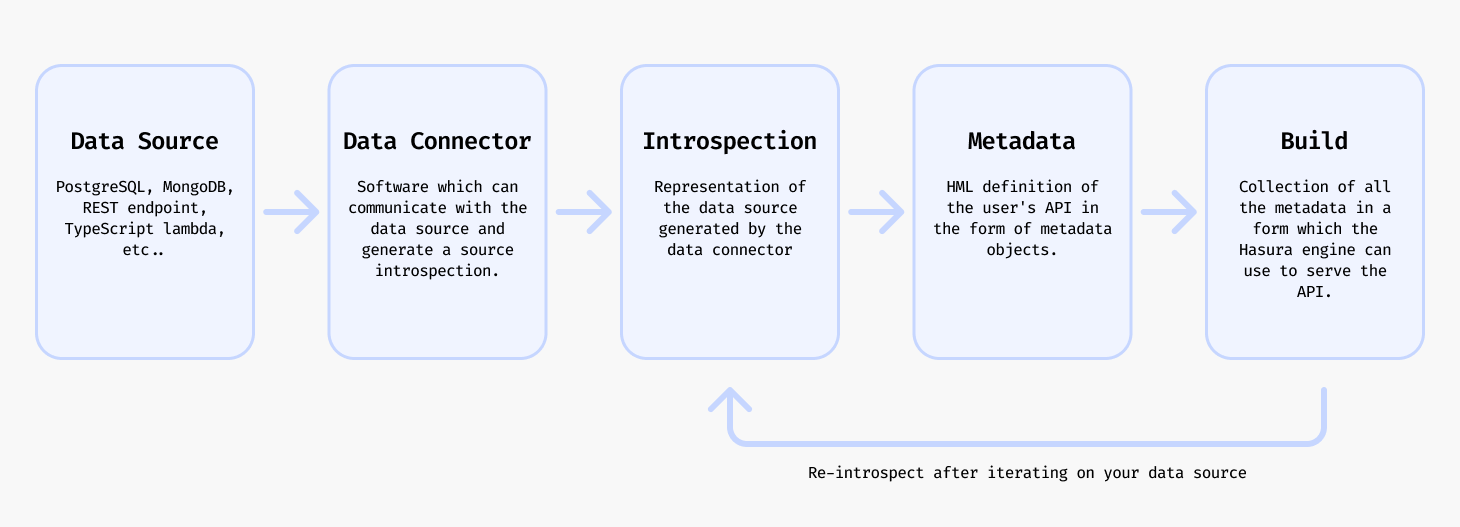

Lifecycle

The lifecycle of creating a model in your metadata is as follows:

- Have some entity in your data source that you want to make queryable via your API.

- Introspect your data source using the DDN CLI with the relevant data connector to fetch the entity resources.

- Add the model to your metadata with the DDN CLI.

- Create a build of your supergraph API with the DDN CLI.

- Serve your build as your API with the Hasura engine either locally or in the cloud.

- Iterate on your API by repeating this process or by editing your metadata manually as needed.
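As a rough sketch, the local steps of this lifecycle can be scripted. The Python below assumes the ddn binary is on your PATH. The helpers lifecycle_commands and run_lifecycle, and the my_pg names in the usage line, are hypothetical. The script only assembles the CLI invocations that appear on this page, prints them, and runs them one by one when dry_run is False.

```python
import subprocess


def lifecycle_commands(connector_name: str, link_name: str, collection: str) -> list[list[str]]:
    """Return the DDN CLI steps: introspect, add a model, build, and serve locally.

    Pass collection="*" to add every discovered collection. Because each command
    is an argument list, "*" reaches ddn literally and is never expanded by a shell.
    """
    return [
        ["ddn", "connector", "introspect", connector_name],
        ["ddn", "model", "add", link_name, collection],
        ["ddn", "supergraph", "build", "local"],
        ["ddn", "run", "docker-start"],
    ]


def run_lifecycle(connector_name: str, link_name: str, collection: str, dry_run: bool = True) -> None:
    for argv in lifecycle_commands(connector_name, link_name, collection):
        print(" ".join(argv))
        if not dry_run:
            # check=True stops the sequence at the first failing step.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Dry run: prints the commands without executing them.
    run_lifecycle("my_pg", "my_pg", "*")
```

Building each command as an argument list instead of a shell string is why "*" needs quotes only when you type it into a shell yourself.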

Create a model

To add a model, you need a data connector that is already set up and connected to your data source. Follow the relevant tutorial for your data source in How to Build with DDN to get to that point.

From a source entity

Introspect your data source to fetch its resources:

ddn connector introspect <connector_name>

Whenever you update your data source, you can run the above command to fetch the latest resources.

Optionally, list the resources that DDN discovered in your data source during introspection:

ddn connector show-resources <connector_name>

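Add a model for the entity you want to expose:

ddn model add <connector_link_name> <collection_name>

Alternatively, add models for all discovered collections by specifying "*" (quoted so that your shell does not expand it):

ddn model add <connector_link_name> "*"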

This will add models with their accompanying metadata definitions to your metadata.

You can now build your supergraph API, serve it, and query your data.

Note that the above CLI commands work without the --subgraph flag because the relevant subgraph has been set in the CLI context. You can learn more about creating and switching contexts in the CLI context section.

For a walkthrough on how to create a model, see the Model Tutorials section.

Via native query

You can use the query syntax of the underlying data source to create a native query and expose it as a model in your supergraph API.

The process of adding a native query differs for each data connector, because each data source has its own query syntax. The processes for PostgreSQL, MongoDB, and ClickHouse are described below.

The process for adding a native query for PostgreSQL is:

- Create a new SQL file in your connector directory.

- Name the file after how you want to reference the native query in your supergraph API.

- Add the SQL for your native query to the file.

- Use the DDN CLI plugin for the PostgreSQL connector to add the native query to the connector's configuration, e.g.:

ddn connector plugin \
  --connector subgraph_name/connector/connector_name/connector.yaml \
  -- native-operation create \
  --operation-path path/to/sql_file_name.sql \
  --kind query

- Introspect your PostgreSQL data connector to fetch the latest resources.

ddn connector introspect <connector_name>

- Show the found resources to see the new native query.

ddn connector show-resources <connector_name>

- Add the model for the native query.

ddn model add <connector_link_name> <model_name>

- Rebuild and serve your supergraph API.

Find out more about native queries for PostgreSQL here.
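A minimal sketch of the first steps above, assuming the native_operations/queries/ layout used in this page's PostgreSQL tutorial and an --operation-path relative to the connector directory. The helper add_pg_native_query is hypothetical. It writes the SQL file, named after the native query, and returns the plugin command as an argument list that you could pass to subprocess.run.

```python
from pathlib import Path


def add_pg_native_query(connector_dir: Path, query_name: str, sql: str) -> list[str]:
    """Write <query_name>.sql under native_operations/queries/ and return the plugin command.

    The file name (without .sql) is how the native query is referenced in the API.
    The directory layout is an assumption borrowed from the tutorial on this page.
    """
    rel_path = Path("native_operations") / "queries" / f"{query_name}.sql"
    target = connector_dir / rel_path
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(sql.strip() + "\n", encoding="utf-8")
    return [
        "ddn", "connector", "plugin",
        "--connector", str(connector_dir / "connector.yaml"),
        "--", "native-operation", "create",
        "--operation-path", rel_path.as_posix(),
        "--kind", "query",
    ]
```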

The process for adding a native query for MongoDB is:

- Create a JSON file in your connector's directory containing the MongoDB aggregation pipeline that defines the native query.

- Name the file after how you want to reference the native query in your supergraph API.

- Use the DDN CLI plugin for the MongoDB connector to add the aggregation pipeline to the connector's configuration, e.g.:

ddn connector plugin \
  --connector subgraph_name/connector/connector_name/connector.yaml \
  -- native-query create path/to/aggregation_pipeline_filename.json \
  --collection collection_name

- Introspect your MongoDB data connector to fetch the latest resources.

ddn connector introspect <connector_name>

- Show the found resources to see the new native query.

ddn connector show-resources <connector_name>

- Add the model for the native query.

ddn model add <connector_link_name> <model_name>

- Rebuild and serve your supergraph API.

Find out more about native queries for MongoDB here.
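A similar sketch for MongoDB. It assumes the file holds just the pipeline as a JSON array of stages, and it uses a hypothetical pipelines/ subdirectory (the steps above only require the file to be in the connector's directory). The helper write_pipeline is also hypothetical. The returned argument list mirrors the plugin command shown above.

```python
import json
from pathlib import Path


def write_pipeline(connector_dir: Path, name: str, collection: str, pipeline: list) -> list[str]:
    """Write the pipeline to pipelines/<name>.json and return the plugin command.

    pipelines/ is a hypothetical subdirectory; the file name (without .json)
    is the name you want to reference in the supergraph API.
    """
    if not isinstance(pipeline, list):
        raise TypeError("an aggregation pipeline is a list of stages")
    rel_path = Path("pipelines") / f"{name}.json"
    target = connector_dir / rel_path
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(pipeline, indent=2) + "\n", encoding="utf-8")
    return [
        "ddn", "connector", "plugin",
        "--connector", str(connector_dir / "connector.yaml"),
        "--", "native-query", "create", str(target),
        "--collection", collection,
    ]
```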

The process for adding a native query for ClickHouse is:

- Create a new SQL file in your connector directory.

- Use ClickHouse parameter syntax in the SQL file to define arguments.

- Create a JSON configuration file in your connector directory specifying the SQL file path and the return type.

{
  "tables": {},
  "queries": {
    "Name": {
      "exposed_as": "collection",
      "file": "path/to/sql_file_name.sql",
      "return_type": {
        "kind": "definition",
        "columns": {
          "column_name": "column_type"
        }
      }
    }
  }
}

- Introspect your ClickHouse data connector to fetch the latest resources.

ddn connector introspect <connector_name>

- Show the found resources to see the new native query.

ddn connector show-resources <connector_name>

- Add the model for the native query.

ddn model add <connector_link_name> <model_name>

- Rebuild and serve your supergraph API.

Find out more about native queries for ClickHouse here.
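The configuration step can be sketched in Python, assuming configuration.json sits in the connector directory and that the "file" path in each entry is relative to that directory, as in the example above. The helper register_clickhouse_query is hypothetical. It keeps any existing keys and entries, and it refuses to register a query whose SQL file does not exist, which catches a mismatched file name early.

```python
import json
from pathlib import Path


def register_clickhouse_query(connector_dir: Path, name: str, sql_file: str,
                              columns: dict[str, str]) -> dict:
    """Add or replace one entry under "queries" in configuration.json.

    sql_file is assumed to be relative to connector_dir. Other keys and
    entries already in the file are kept unchanged.
    """
    if not (connector_dir / sql_file).is_file():
        # Fail early on a mismatched file name.
        raise FileNotFoundError(f"{sql_file} not found under {connector_dir}")
    config_path = connector_dir / "configuration.json"
    config = json.loads(config_path.read_text(encoding="utf-8")) if config_path.exists() else {}
    config.setdefault("tables", {})
    config.setdefault("queries", {})[name] = {
        "exposed_as": "collection",
        "file": sql_file,
        "return_type": {"kind": "definition", "columns": columns},
    }
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```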

Update a model

If you want to update your model to reflect a change in the underlying data source, first introspect to get the latest resources and then update the relevant model.

ddn connector introspect <connector_name>

ddn model update <connector_link_name> <model_name>

The output explains which resources were added to or updated in the model.

You can now build your supergraph API, serve it, and query your data with the updated model.

You can also update the model by editing the metadata manually.

For a walkthrough on how to update a model, see the Model Tutorials section.

Extend a model

A model can be extended to return nested data, or to enrich or add to the data it returns.

For example, you can extend a model like Customers to also return the related Orders for each customer.

Or you can add a custom piece of logic on a model like Orders to compute and return the order's total price converted at the current exchange rate.

This is done via a Relationship. Read more about creating relationships here.

Delete a model

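To remove a model, pass its name to the model remove command. For example, to remove a model named users:

ddn model remove users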

In addition to removing the Model object itself, the DDN CLI will also remove the associated metadata definitions.

Tutorials

The tutorials below continue on from the corresponding tutorial in the How to Build with DDN section. Select the relevant data connector to follow the tutorial.

Creating a model

To query data from your API, you'll first need to create a model that represents that data.

From a source entity

PostgreSQL: via a new table or view

CREATE TABLE public.comments (
  id serial PRIMARY KEY,
  comment text NOT NULL,
  user_id integer NOT NULL,
  post_id integer NOT NULL
);

INSERT INTO public.comments (comment, user_id, post_id)
VALUES
  ('Great post! Really enjoyed reading this.', 1, 2),
  ('Thanks for sharing your thoughts!', 2, 1),
  ('Interesting perspective.', 3, 1);

ddn connector introspect my_pg

ddn model add my_pg comments

ddn supergraph build local

ddn run docker-start

ddn console --local

query {
  comments {
    id
    comment
    user_id
    post_id
  }
}

{
  "data": {
    "comments": [
      {
        "id": 1,
        "comment": "Great post! Really enjoyed reading this.",
        "user_id": 1,
        "post_id": 2
      },
      {
        "id": 2,
        "comment": "Thanks for sharing your thoughts!",
        "user_id": 2,
        "post_id": 1
      },
      {
        "id": 3,
        "comment": "Interesting perspective.",
        "user_id": 3,
        "post_id": 1
      }
    ]
  }
}
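Besides the console, you can send the same query over HTTP. This Python sketch uses only the standard library and assumes the local engine listens at http://localhost:3280/graphql with no authentication headers required; check your project's compose configuration if it differs. The helpers build_request and parse_comments are hypothetical.

```python
import json
import urllib.request

# Assumption: the local engine started with ddn run docker-start listens here.
LOCAL_GRAPHQL_URL = "http://localhost:3280/graphql"

COMMENTS_QUERY = """
query {
  comments {
    id
    comment
    user_id
    post_id
  }
}
"""


def build_request(url: str = LOCAL_GRAPHQL_URL) -> urllib.request.Request:
    """Wrap the query in a JSON body ({"query": ...}) and prepare a POST request."""
    body = json.dumps({"query": COMMENTS_QUERY}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_comments(raw: bytes) -> list[dict]:
    """Return the comments list, raising if the response reports GraphQL errors."""
    payload = json.loads(raw)
    if payload.get("errors"):
        raise RuntimeError(payload["errors"])
    return payload["data"]["comments"]
```

With the local engine running, urllib.request.urlopen(build_request()) returns a response whose body can be passed to parse_comments. If your project requires authentication, add the relevant headers to the request.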

MongoDB: via a new collection

db.createCollection("comments");

db.comments.insertMany([
  {
    comment_id: 1,
    comment: "Great post! Really enjoyed reading this.",
    user_id: 1,
    post_id: 2,
  },
  {
    comment_id: 2,
    comment: "Thanks for sharing your thoughts!",
    user_id: 2,
    post_id: 1,
  },
  {
    comment_id: 3,
    comment: "Interesting perspective.",
    user_id: 3,
    post_id: 1,
  },
]);

ddn connector introspect my_mongo

ddn model add my_mongo comments

ddn supergraph build local

ddn run docker-start

ddn console --local

query {
  comments {
    comment_id
    comment
    user_id
    post_id
  }
}

{
  "data": {
    "comments": [
      {
        "comment_id": 1,
        "comment": "Great post! Really enjoyed reading this.",
        "user_id": 1,
        "post_id": 2
      },
      {
        "comment_id": 2,
        "comment": "Thanks for sharing your thoughts!",
        "user_id": 2,
        "post_id": 1
      },
      {
        "comment_id": 3,
        "comment": "Interesting perspective.",
        "user_id": 3,
        "post_id": 1
      }
    ]
  }
}

ClickHouse: via a new table or view

CREATE TABLE comments (
  id UInt32,
  comment String,
  user_id UInt32,
  post_id UInt32
)
ENGINE = MergeTree()
ORDER BY id;

INSERT INTO comments (id, comment, user_id, post_id) VALUES
  (1, 'Great post! Really enjoyed reading this.', 1, 2),
  (2, 'Thanks for sharing your thoughts!', 2, 1),
  (3, 'Interesting perspective.', 3, 1);

ddn connector introspect my_clickhouse

ddn model add my_clickhouse comments

ddn supergraph build local

ddn run docker-start

ddn console --local

query {
  comments {
    id
    comment
    user_id
    post_id
  }
}

{
  "data": {
    "comments": [
      {
        "id": 1,
        "comment": "Great post! Really enjoyed reading this.",
        "user_id": 1,
        "post_id": 2
      },
      {
        "id": 2,
        "comment": "Thanks for sharing your thoughts!",
        "user_id": 2,
        "post_id": 1
      },
      {
        "id": 3,
        "comment": "Interesting perspective.",
        "user_id": 3,
        "post_id": 1
      }
    ]
  }
}

Via native query

PostgreSQL

Within your connector's directory, you can add a new file with a .sql extension to define a native query.

mkdir -p app/connector/my_pg/native_operations/queries/

-- native_operations/queries/order_users_of_same_age.sql
SELECT
  id,
  name,
  age,
  RANK() OVER (PARTITION BY age ORDER BY name ASC) AS rank_within_age
FROM
  users
WHERE
  age = {{ age }}

Arguments are passed to the native query as variables surrounded by double curly braces {{ }}.
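To illustrate what the double-curly-brace syntax declares, the Python sketch below lists the argument names in the query above and rewrites each placeholder as a bound parameter. It runs the query against an in-memory SQLite database standing in for PostgreSQL, using the four age-25 users from this tutorial as sample rows. This is not how the PostgreSQL connector itself processes the file, and the helper names argument_names, to_bound_sql, and demo are hypothetical.

```python
import re
import sqlite3

# Matches {{ name }} placeholders, allowing optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

# Same query as order_users_of_same_age.sql above.
NATIVE_SQL = """
SELECT
  id,
  name,
  age,
  RANK() OVER (PARTITION BY age ORDER BY name ASC) AS rank_within_age
FROM
  users
WHERE
  age = {{ age }}
"""


def argument_names(sql: str) -> list[str]:
    """Return each placeholder name once, in order of first appearance."""
    names: list[str] = []
    for name in PLACEHOLDER.findall(sql):
        if name not in names:
            names.append(name)
    return names


def to_bound_sql(sql: str) -> str:
    """Turn {{ name }} into SQLite's :name so values are bound, never spliced into the text."""
    return PLACEHOLDER.sub(lambda m: ":" + m.group(1), sql)


def demo(age: int) -> list[tuple]:
    # In-memory SQLite stands in for PostgreSQL; RANK() needs SQLite 3.25 or newer.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, age INTEGER)")
    conn.executemany(
        "INSERT INTO users (name, age) VALUES (?, ?)",
        [("Dan", 25), ("Erika", 25), ("Fatima", 25), ("Gabe", 25)],
    )
    rows = conn.execute(to_bound_sql(NATIVE_SQL), {"age": age}).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    print(argument_names(NATIVE_SQL))
    for row in demo(25):
        print(row)
```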

ddn connector plugin \
  --connector app/connector/my_pg/connector.yaml \
  -- \
  native-operation create \
  --operation-path native_operations/queries/order_users_of_same_age.sql \
  --kind query

ddn connector introspect my_pg

ddn connector show-resources my_pg

ddn model add my_pg order_users_of_same_age

Let's add a few more users to make this native query example more interesting:

INSERT INTO users (name, age) VALUES ('Dan', 25), ('Erika', 25), ('Fatima', 25), ('Gabe', 25);

ddn supergraph build local

ddn run docker-start

Now in your console you can run the following query to see the results:

query UsersOfSameAge {
  orderUsersOfSameAge(args: { age: 25 }) {
    id
    name
    age
    rankWithinAge
  }
}

MongoDB

Let's create a native query that ranks users within their age group by name using an aggregation pipeline.

mkdir -p <my_subgraph>/connector/<connector_name>/native_queries/

// native_queries/users_ranked_by_age.json

{
  "name": "usersRankedByAge",
  "representation": "collection",
  "description": "Rank users within their age group by name",
  "inputCollection": "users",
  "arguments": {
    "age": { "type": { "scalar": "int" } }
  },
  "resultDocumentType": "UserRank",
  "objectTypes": {
    "UserRank": {
      "fields": {
        "_id": { "type": { "scalar": "objectId" } },
        "name": { "type": { "scalar": "string" } },
        "age": { "type": { "scalar": "int" } },
        "rank": { "type": { "scalar": "int" } }
      }
    }
  },
  "pipeline": [
    {
      "$match": {
        "age": "{{ age }}"
      }
    },
    {
      "$setWindowFields": {
        "partitionBy": "$age",
        "sortBy": { "name": 1 },
        "output": {
          "rank": {
            "$rank": {}
          }
        }
      }
    }
  ]
}

This query returns the users whose age matches the age argument, each with a rank based on the alphabetical order of their names within that age group.

ddn connector introspect <connector_name>

ddn connector show-resources <connector_name>

ddn model add <connector_name> usersRankedByAge

We can insert some more users to make the query result more interesting:

docker exec -it mongodb mongosh my_database --eval "
  db.users.insertMany([
    { user_id: 1, name: 'Dinesh', age: 25 },
    { user_id: 2, name: 'Bertram', age: 25 },
    { user_id: 3, name: 'Erlich', age: 25 }
  ]);
"

In the console, run the following query:

query MyQuery {
  usersRankedByAge(args: { age: 25 }) {
    name
    age
    rank
    id
  }
}

You should see output similar to the following (your id values will differ):

{
  "data": {
    "usersRankedByAge": [
      {
        "name": "Alice",
        "age": 25,
        "rank": 1,
        "id": "67ae6b1225e762d63aa00aa1"
      },
      {
        "name": "Bertram",
        "age": 25,
        "rank": 2,
        "id": "67ae85a5a437b6a167a00aa1"
      },
      {
        "name": "Dinesh",
        "age": 25,
        "rank": 3,
        "id": "67ae85a5a437b6a167a00aa3"
      },
      {
        "name": "Erlich",
        "age": 25,
        "rank": 4,
        "id": "67ae85a5a437b6a167a00aa2"
      }
    ]
  }
}
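The ranks in this output follow from the two pipeline stages. As a sketch, the plain Python function below mimics them on in-memory dictionaries: it keeps users whose age matches, then ranks them by name within each age partition. The function name rank_users_by_age is hypothetical, and its tie handling follows the documented behavior of $rank, where tied values share a rank and leave a gap after them.

```python
from itertools import groupby


def rank_users_by_age(users: list[dict], age: int) -> list[dict]:
    """Mimic $match on age followed by $setWindowFields with $rank over name.

    Tied names share a rank and the following name skips ahead (1, 1, 3),
    which is how $rank treats ties.
    """
    matched = [u for u in users if u["age"] == age]  # $match stage
    # partitionBy "$age", then sortBy { name: 1 } within each partition
    matched.sort(key=lambda u: (u["age"], u["name"]))
    ranked: list[dict] = []
    for _, group in groupby(matched, key=lambda u: u["age"]):
        partition = list(group)
        for i, user in enumerate(partition):
            if i > 0 and user["name"] == partition[i - 1]["name"]:
                rank = ranked[-1]["rank"]  # same name as the previous row: share its rank
            else:
                rank = i + 1  # 1-based position within the partition
            ranked.append({**user, "rank": rank})
    return ranked


if __name__ == "__main__":
    sample = [
        {"name": "Alice", "age": 25},
        {"name": "Dinesh", "age": 25},
        {"name": "Bertram", "age": 25},
        {"name": "Erlich", "age": 25},
    ]
    for user in rank_users_by_age(sample, 25):
        print(user["rank"], user["name"])
```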

ClickHouse

Within your connector's directory, you can add a SQL file, plus an entry in the connector's configuration.json, to define a native query.

mkdir -p <my_subgraph>/connector/<connector_name>/queries/

-- queries/UserByName.sql
SELECT *
FROM "default"."users"
WHERE "users"."name" = {name: String}

Note that this uses the ClickHouse query parameter syntax: {name: String} declares a parameter called name of type String.
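As a rough check of which arguments a ClickHouse native query declares, the regular expression below pulls name and type pairs out of {name: Type} placeholders. The helper clickhouse_parameters is hypothetical and written for simple and parameterized type names such as UInt32 or Nullable(String); it is not a full parser of ClickHouse SQL.

```python
import re

# ClickHouse query parameters have the form {name: Type}, e.g. {name: String}.
# Heuristic: the type may contain word characters, parentheses, commas and spaces,
# which covers names like UInt32 or Nullable(String). Not a full SQL parser.
CH_PARAM = re.compile(r"\{\s*([A-Za-z_]\w*)\s*:\s*([A-Za-z_][\w(), ]*?)\s*\}")


def clickhouse_parameters(sql: str) -> list[tuple[str, str]]:
    """Return (parameter name, ClickHouse type) pairs in order of appearance."""
    return CH_PARAM.findall(sql)


if __name__ == "__main__":
    sql = 'SELECT *\nFROM "default"."users"\nWHERE "users"."name" = {name: String}'
    print(clickhouse_parameters(sql))
```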

// configuration.json

{
  "tables": {},
  "queries": {
    "UserByName": {
      "exposed_as": "collection",
      "file": "queries/UserByName.sql",
      "return_type": {
        "kind": "definition",
        "columns": {
          "id": "Int32",
          "name": "String"
        }
      }
    }
  }
}

ddn connector introspect <connector_name>

ddn model add <connector_name> UserByName

Updating a model

Your underlying data source may change over time. You can update your model to reflect these changes.

You'll need to update the mapping of your model to the data source by updating the DataConnectorLink object.

ddn connector introspect <connector_name>

ddn model update <connector_link_name> <model_name>

This will find changed resources in the data source and attempt to merge them into the model.

If you'd rather re-create the model from scratch, first run the model remove command (below) and then add the model again.

Extending a model

Find tutorials about extending a model with related information or custom logic in the Relationships section.

Deleting a model

ddn model remove users

Reference

You can learn more about models in the metadata reference docs.