Self-Hosted

Introduction

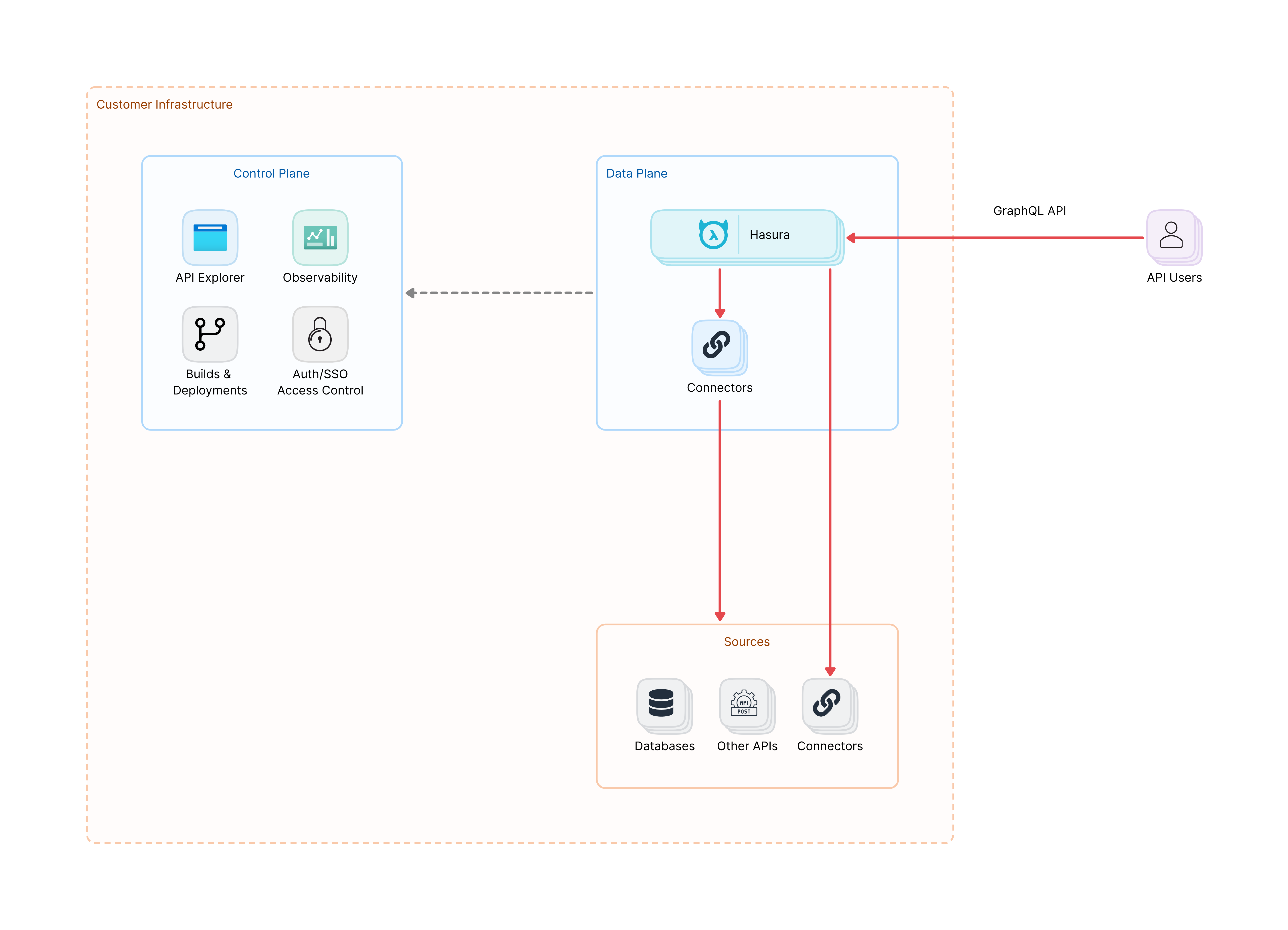

For customers with strict security and compliance requirements, Private DDN Self-Hosted allows you to host both the Control Plane and Data Plane on your own infrastructure.

This is a premium offering in which the Hasura team helps you set up the entire DDN Platform across your organization. An enterprise license is required for this offering.

If you would like access to Private DDN Self-Hosted, please contact sales.

Architecture

In self-hosted mode, the Control Plane and Data Plane both run in the customer's cloud account and are managed by the customer's own operations team, with help from the Hasura team. Uptime and reliability of the Data Plane are the responsibility of the customer's infrastructure team.

A Kubernetes cluster is required for installing DDN.
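
Before contacting sales, an operations team may want to confirm that a cluster is reachable from the machine they plan to install from. The following is a minimal sketch, not part of the Hasura installation procedure. It assumes `kubectl` is the tool used to reach the cluster, and the function name `kubernetes_preflight` is hypothetical. It checks only that `kubectl` is on the `PATH` and that `kubectl cluster-info` succeeds for the current context. It does not check any DDN-specific requirement.

```python
import shutil
import subprocess


def kubernetes_preflight(which=shutil.which, run=subprocess.run):
    """Return a list of problems found; an empty list means both checks passed.

    `which` and `run` are injectable so the check can be exercised without
    a real cluster. This is an illustrative helper, not a Hasura tool.
    """
    # kubectl must be on PATH before anything else can be checked.
    if which("kubectl") is None:
        return ["kubectl not found on PATH"]

    problems = []
    # `kubectl cluster-info` exits non-zero when the current context
    # cannot reach the cluster's API server.
    result = run(
        ["kubectl", "cluster-info"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        problems.append("cluster unreachable: " + result.stderr.strip())
    return problems
```

Calling `kubernetes_preflight()` with no arguments runs the checks against the current `kubectl` context and returns an empty list when both pass.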

Get started

To get started with Hasura DDN in your own infrastructure, contact sales.